Building Trust in Citizen Science: A Hierarchical Verification Framework for Biomedical Data Quality

Abstract

This article presents a comprehensive framework for implementing hierarchical verification systems to ensure the quality and reliability of citizen science data, with a specific focus on applications in biomedical and clinical research. As the volume of data collected through public participation grows, traditional expert-only verification becomes unsustainable. We explore the foundational principles of data verification, detail methodological approaches including automated validation and community consensus models, address common troubleshooting scenarios, and provide a comparative analysis of validation techniques. For researchers and drug development professionals, this framework offers practical strategies to enhance data trustworthiness, enabling the effective use of citizen-generated data while maintaining the scientific rigor required for research and regulatory purposes.

The Critical Need for Hierarchical Verification in Citizen Science

Data verification is the systematic process of checking data for accuracy, completeness, and consistency after collection and before use, ensuring it reflects real-world facts and is fit for its intended scientific purpose [1] [2] [3]. This process serves as a critical quality control mechanism, identifying and correcting errors or inconsistencies to ensure that data is reliable and can be used for valid analysis [1]. In the specific context of citizen science, verification often focuses on confirming species identity in biological records, a fundamental step for ensuring the dataset's trustworthiness for ecological research and policy development [4].

The integrity of scientific research is built upon a foundation of reliable data. Data verification acts as a cornerstone for this foundation, ensuring that subsequent analyses, conclusions, and scientific claims are valid and trustworthy [1]. Without rigorous verification, research findings are vulnerable to errors that can misdirect scientific understanding, resource allocation, and policy decisions.

Verification in Practice: Approaches for Citizen Science Data

Ecological citizen science projects, which collect vast amounts of data over large spatial and temporal scales, employ a variety of verification approaches. These methods ensure the data is of sufficient quality for pure and applied research. A systematic review of 259 published citizen science schemes identified three primary verification methods [4].

Table 1: Primary Data Verification Approaches in Citizen Science

| Verification Approach | Description | Prevalence | Key Characteristics |
|---|---|---|---|
| Expert Verification | Records are checked post-submission by a domain expert (e.g., an ecologist) for correctness [4]. | Most widely used, especially among longer-running schemes [4]. | Considered the traditional "gold standard," but can be time-consuming and resource-intensive, creating bottlenecks for large datasets [4]. |
| Community Consensus | Records are assessed by the community of participants themselves, often through a voting or peer-review system [4]. | A commonly used alternative to expert verification [4]. | Leverages the "wisdom of the crowd"; scalable but may require mechanisms to manage consensus and ensure accuracy. |
| Automated Approaches | Uses algorithms, such as deep learning classifiers, to verify data automatically [4] [5]. | A growing field, often used in conjunction with other methods in a semi-automated framework [4] [5]. | Offers a high-confidence, scalable solution for large data volumes; can rapidly validate the bulk of records, freeing experts for complex cases [5]. |

A Hierarchical Verification System: Protocol and Workflow

A proposed hierarchical verification system combines the strengths of automated, community, and expert methods to create an efficient and robust workflow [4]. This system is designed to handle large data volumes without sacrificing accuracy.

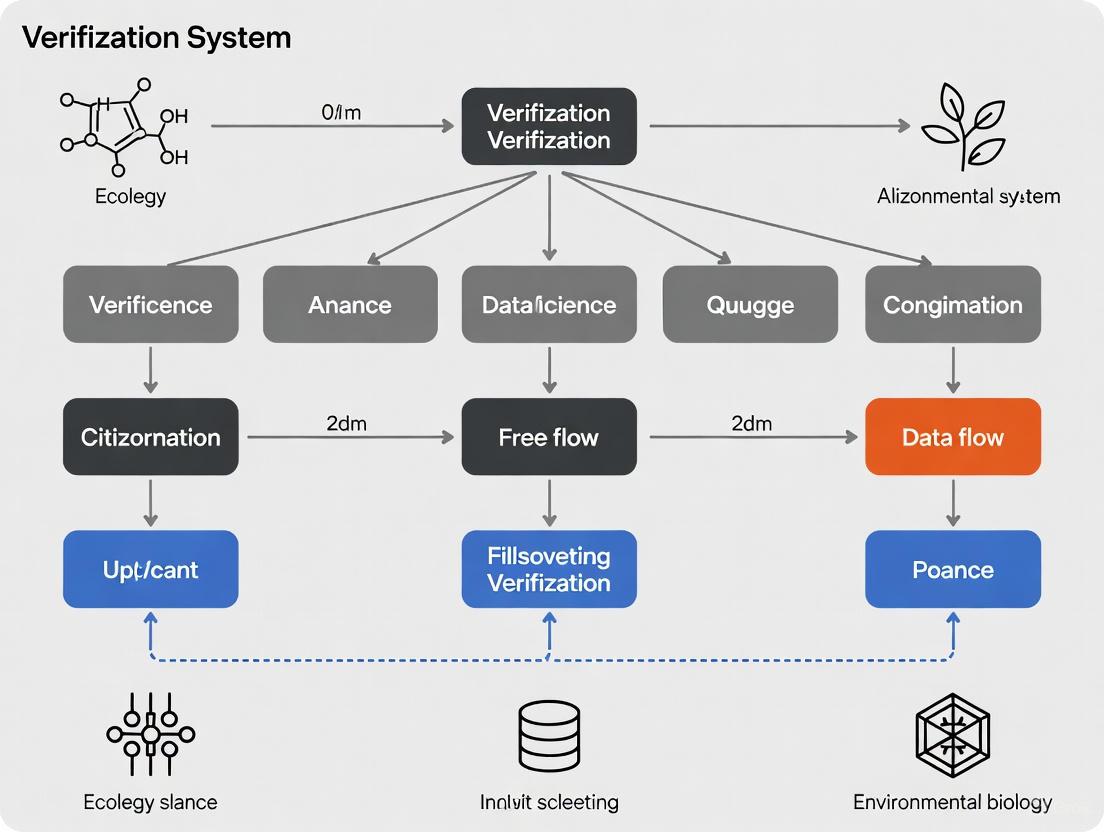

Diagram 1: Hierarchical Verification Workflow

Experimental Protocol: Semi-Automated Validation with Conformal Prediction

This protocol details a modern, scalable method for verifying citizen science records, integrating deep learning with statistical confidence control [5].

I. Objective: To establish a semi-automated validation framework for citizen science biodiversity records that provides rigorous statistical guarantees on prediction confidence, enabling high-throughput data verification.

II. Research Reagent Solutions

Table 2: Essential Materials for Semi-Automated Validation

| Item | Function / Description | Example / Specification |
|---|---|---|
| Deep Learning Classifier | A convolutional neural network (CNN) or similar model for image-based species identification. | Trained on a dataset of pre-validated species images (e.g., 25,000 jellyfish records) [5]. |
| Conformal Prediction Framework | A statistical method that produces prediction sets with guaranteed coverage (valid when the calibration data and new records are exchangeable), adding a measure of confidence to each classification [5]. | Generates sets of plausible taxonomic labels; a singleton set indicates high confidence for automatic acceptance [5]. |
| Calibration Dataset | A held-out set of labeled data used to calibrate the conformal predictor to ensure its confidence levels are accurate [5]. | A subset of the main dataset not used during the initial classifier training. |
| Expert-Validated Gold Standard | A smaller dataset (e.g., 800 records) verified by domain experts to evaluate the framework's performance against the traditional standard [5]. | Used for final accuracy assessment and benchmarking. |

III. Methodology:

Data Preparation and Partitioning:

- Source a large dataset of citizen science records, ideally with associated images and metadata.

- Partition the data into three subsets: a training set (~70%) for model development, a calibration set (~15%) for the conformal prediction, and a test set (~15%) for final evaluation [5].
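A minimal sketch of the partitioning step, assuming each record is a Python dict with a hypothetical `taxon` field holding the verified label. Splitting within each taxon (stratification, which the later protocol in this article also recommends) keeps rare taxa represented in the calibration and test sets; the 70/15/15 fractions are only a default parameter here.

```python
import random
from collections import defaultdict


def stratified_split(records, label_key="taxon", fractions=(0.70, 0.15, 0.15), seed=42):
    """Split records into (train, calibration, test), stratified by label.

    `records` is a list of dicts; `label_key` names the (hypothetical) field
    holding the verified taxon. Rounding is applied per taxon, so very rare
    taxa may end up with no calibration or test records.
    """
    if len(fractions) != 3 or abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must be three values summing to 1")
    rng = random.Random(seed)  # fixed seed makes the split reproducible

    by_label = defaultdict(list)
    for rec in records:
        by_label[rec[label_key]].append(rec)

    train, calib, test = [], [], []
    for label in sorted(by_label):  # sorted for deterministic ordering
        group = list(by_label[label])
        rng.shuffle(group)
        n_train = round(len(group) * fractions[0])
        n_calib = round(len(group) * fractions[1])
        train.extend(group[:n_train])
        calib.extend(group[n_train:n_train + n_calib])
        test.extend(group[n_train + n_calib:])
    return train, calib, test


records = [{"id": i, "taxon": "Aurelia aurita" if i % 2 else "Pelagia noctiluca"} for i in range(200)]
train, calib, test = stratified_split(records)
print(len(train), len(calib), len(test))  # 140 30 30
```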

Model Training and Calibration:

- Training: Train the deep learning classifier on the training set to perform hierarchical taxonomic classification (e.g., from genus to species) [5].

- Calibration: Using the calibration set, run the trained classifier and apply the conformal prediction algorithm. This step calculates non-conformity scores, which determine how to set prediction thresholds to achieve a desired confidence level (e.g., 95%) [5].
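The calibration step can be sketched with standard split conformal prediction. This is an illustrative version, not the implementation used in [5]: it assumes the classifier outputs a probability per label, uses the common non-conformity score 1 - p(true label), and takes the ceil((n+1)(1-alpha))-th smallest calibration score as the threshold, which yields at least 1-alpha coverage when new records are exchangeable with the calibration set. The function names `calibrate_threshold` and `prediction_set` are hypothetical.

```python
import math


def calibrate_threshold(calib_probs, calib_labels, alpha=0.05):
    """Split conformal calibration with non-conformity score s = 1 - p(true label).

    calib_probs: list of dicts mapping label -> predicted probability
    calib_labels: list of the true (expert-verified) labels
    Returns q_hat, the score threshold for (1 - alpha) target coverage.
    """
    scores = sorted(1.0 - probs[y] for probs, y in zip(calib_probs, calib_labels))
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # finite-sample corrected rank
    if k > n:
        return math.inf  # calibration set too small: every label is included
    return scores[k - 1]


def prediction_set(probs, q_hat):
    """Return every label whose non-conformity score is at most q_hat."""
    return {label for label, p in probs.items() if 1.0 - p <= q_hat}


# Toy calibration set: 99 records whose true label "A" gets probability 0.01 ... 0.99.
calib_probs = [{"A": (i + 1) / 100, "B": 1 - (i + 1) / 100} for i in range(99)]
q_hat = calibrate_threshold(calib_probs, ["A"] * 99, alpha=0.10)
print(round(q_hat, 2))
print(sorted(prediction_set({"A": 0.95, "B": 0.05}, 0.5)))
```

A singleton output such as `{"A"}` is what the next step treats as high confidence; lowering `alpha` raises the threshold and tends to produce larger sets.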

Hierarchical Verification and Output:

- Automated Acceptance: Process new, unverified records through the calibrated framework. Records for which the framework outputs a singleton prediction set (only one plausible label) at the desired confidence level are automatically accepted into the verified dataset [5].

- Escalation: Records that result in larger prediction sets (multiple plausible labels) or fall below the confidence threshold are flagged and escalated to the next level of the hierarchy for community or expert verification [4] [5].
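The acceptance and escalation rule can be expressed as a small routing function. The split between community and expert review by prediction-set size (`max_community_set_size`) is an assumed policy added for illustration; the protocol itself only specifies that non-singleton sets are escalated. Empty sets, which split conformal prediction can produce, are sent to experts in this sketch.

```python
from enum import Enum


class Route(Enum):
    AUTO_ACCEPT = "auto_accept"
    COMMUNITY_REVIEW = "community_review"
    EXPERT_REVIEW = "expert_review"


def route_record(pred_set, max_community_set_size=3):
    """Route a record by the size of its conformal prediction set.

    - exactly one label: accept automatically
    - a few labels (hypothetical cut-off): ask the community to choose
    - empty or large sets: send to a domain expert
    """
    size = len(pred_set)
    if size == 1:
        return Route.AUTO_ACCEPT
    if 1 < size <= max_community_set_size:
        return Route.COMMUNITY_REVIEW
    return Route.EXPERT_REVIEW


for s in [{"Aurelia aurita"}, {"Aurelia aurita", "Aurelia labiata"}, set()]:
    print(sorted(s), route_record(s).value)
```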

The Critical Role of Verification in Scientific Research

Data verification is not a mere technical step but a fundamental component of research integrity. Its importance is multifaceted:

Ensuring Data Integrity and Research Validity: Verification is a critical process for ensuring the data quality and trust necessary for scientific datasets to be used reliably in environmental research, management, and policy development [4]. It confirms that the data accurately reflects the phenomena being studied, thereby supporting sound, evidence-based conclusions [1] [2].

Enabling Robust Analysis: The initial steps of data verification, including checking for duplications, missing data, and anomalies, form the bedrock of quantitative data quality assurance [6]. Clean, verified data is a prerequisite for applying statistical methods correctly, from descriptive statistics to complex inferential models [7] [6].

Building Trust and Supporting Policy: Verified data enhances the credibility of research findings among the scientific community, policymakers, and the public [2]. In citizen science, verification is specifically cited as a key factor in increasing trust in datasets, which is essential for their adoption in formal scientific and policy contexts [4].

The explosion of citizen science initiatives has enabled ecological data collection over unprecedented spatial and temporal scales, producing datasets of immense value for pure and applied research [4]. The utility of this data, however, is constrained by the three defining challenges of Big Data, the "Three V's" of Volume, Variety, and Velocity [8]. Effectively managing these characteristics is critical for ensuring data quality and building trust in citizen-generated datasets.

Volume refers to the sheer amount of data generated by participants, which can range from terabytes to petabytes. Variety encompasses the diverse types and formats of data encountered, from simple species occurrence records to multimedia content like images and audio. Velocity represents the speed at which data is generated, collected, and processed, often in real-time streams [8]. Within citizen science, these challenges are exacerbated by the decentralized nature of data collection and varying levels of participant expertise, creating an urgent need for robust validation frameworks.

Quantitative Analysis of Citizen Science Data Verification

Current Verification Approaches in Ecological Citizen Science

A systematic review of 259 published citizen science schemes examined how existing programs manage data quality through verification, the critical process of checking records for correctness (typically of species identification) [4]. The relative prevalence of the primary verification approaches among the 142 schemes with available information is summarized below:

Table 1: Primary Verification Methods in Ecological Citizen Science Schemes

| Verification Method | Relative Prevalence | Typical Application Context |
|---|---|---|
| Expert Verification | Most widely used | Longer-running schemes; critical conservation data |
| Community Consensus | Intermediate | Platforms with active participant communities |
| Automated Approaches | Least widely used | Schemes with standardized digital data inputs |

This analysis indicates that expert verification remains the default approach, particularly among established schemes. However, as data volumes grow, this method becomes increasingly unsustainable, creating bottlenecks that delay data availability for research and decision-making [4].

Performance Metrics for Validation Techniques

Recent research has developed more sophisticated, semi-automated validation frameworks to address the Three V's challenge. One such method, Conformal Taxonomic Validation, uses probabilistic classification to provide reliable confidence measures for species identification [5]. Experimental results demonstrate key performance improvements:

Table 2: Performance Metrics for Hierarchical Validation Techniques

| Performance Metric | Traditional Approach | Conformal Taxonomic Validation |
|---|---|---|
| Validation Speed | Slow (manual processing) | Rapid (algorithmic processing) |
| Scalability | Low (human-expert dependent) | High (computational) |
| Uncertainty Quantification | Qualitative/Implicit | Explicit confidence measures |
| Error Rate Control | Variable | User-set targets (e.g., 5%) |
| Resource Requirements | High (specialist time) | Lower (computational infrastructure) |

This hierarchical approach allows the bulk of records to be verified efficiently through automation or community consensus, with only flagged records undergoing expert review, thus optimizing resource allocation [4].

Application Notes & Protocols

Hierarchical Verification System Protocol

The following workflow implements a tiered verification strategy to manage high-volume, high-velocity citizen science data streams without compromising quality.

Figure 1: A hierarchical workflow for citizen science data verification.

Protocol Steps:

- Automated Pre-validation: Implement initial automated checks for data completeness, format compliance, and geographical plausibility. Records failing these checks are flagged for immediate review.

- Community Consensus Voting: Direct records that pass pre-validation to a community platform where experienced participants vote on identification accuracy. Records achieving a pre-defined consensus threshold (e.g., 95% agreement) advance.

- AI-Powered Identification: Process records using machine learning models (e.g., Conformal Taxonomic Validation) that generate species predictions with confidence scores [5]. Records with high confidence scores are automatically validated.

- Expert Verification: Route records that failed previous stages—due to low community consensus, ambiguous automated identification, or rarity/value of the observation—to domain experts for definitive verification.

- Quality Feedback Loop: Aggregate validation outcomes and performance metrics to continuously refine automated systems and provide targeted training to community participants.
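Steps 1 and 2 of this protocol can be sketched as two plain functions. The required field names, the optional per-taxon bounding box used as a geographic plausibility check, and the minimum vote count are all hypothetical choices; a real range check would more likely rely on curated distribution data than on a box.

```python
from collections import Counter
from datetime import date

REQUIRED_FIELDS = ("observer_id", "taxon", "latitude", "longitude", "observed_on")


def prevalidate(record, known_ranges=None):
    """Step 1: return a list of problems; an empty list means the record passes.

    known_ranges optionally maps taxon -> (min_lat, max_lat, min_lon, max_lon),
    a crude, hypothetical stand-in for real distribution data.
    """
    problems = [f"missing {f}" for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    if problems:
        return problems  # later checks need these fields

    lat, lon = record["latitude"], record["longitude"]
    if not (-90 <= lat <= 90 and -180 <= lon <= 180):
        problems.append("coordinates out of range")
    elif known_ranges and record["taxon"] in known_ranges:
        lat_min, lat_max, lon_min, lon_max = known_ranges[record["taxon"]]
        if not (lat_min <= lat <= lat_max and lon_min <= lon <= lon_max):
            problems.append("outside known range for taxon")

    try:
        if date.fromisoformat(record["observed_on"]) > date.today():
            problems.append("observation date in the future")
    except ValueError:
        problems.append("observed_on is not an ISO date")
    return problems


def community_consensus(votes, threshold=0.95, min_votes=5):
    """Step 2: return the agreed label if its vote share reaches threshold, else None."""
    if len(votes) < min_votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) >= threshold else None


good = {"observer_id": "u1", "taxon": "Aurelia aurita", "latitude": 50.4,
        "longitude": -4.1, "observed_on": "2023-06-01"}
print(prevalidate(good))
print(community_consensus(["Aurelia aurita"] * 19 + ["Aurelia labiata"]))
```

Records returning `None` from the consensus step would continue to the AI and expert tiers rather than being rejected outright.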

Protocol for Conformal Taxonomic Validation Experiment

This protocol details the implementation of a conformal prediction framework for automated species identification, a core component of the hierarchical system [5].

Figure 2: Experimental workflow for conformal taxonomic validation.

Experimental Procedure:

Data Curation and Preprocessing:

- Source: Obtain citizen science species observation records with images from platforms like iNaturalist or GBIF (Global Biodiversity Information Facility).

- Criteria: Filter records to include only research-grade observations, i.e., those whose identifications have been confirmed through community agreement or, where available, expert verification.

- Split: Randomly partition data into training (60%), calibration (20%), and test sets (20%), ensuring stratified sampling across taxonomic groups.

Model Training and Calibration:

- Architecture: Utilize a deep convolutional neural network (e.g., ResNet-50) pre-trained on ImageNet, with the final layer adapted for the specific number of taxonomic classes.

- Training: Train the model on the training set using standard cross-entropy loss and data augmentation techniques (random flipping, rotation, color jitter).

- Calibration: On the calibration set, compute non-conformity scores for each example. Use these scores to determine the threshold for generating prediction sets that achieve a user-defined coverage rate (e.g., 90% confidence that the true label is included).

Validation and Integration:

- Testing: Apply the calibrated model to the held-out test set. Evaluate based on (a) classification accuracy and (b) efficiency (average size of prediction sets).

- Integration: Deploy the model within the hierarchical framework. Records where the model's prediction set contains only one species (high confidence) are automatically verified. Records with multiple species in the prediction set (low confidence) are flagged for community or expert review.
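The test-set evaluation can be summarized with a few metrics. This sketch reports empirical coverage (the share of records whose true label lies inside the prediction set, which should be close to the target rate) and mean set size, plus two hypothetical operational metrics for the integration step: the share of records that would be auto-verified and the accuracy among them.

```python
def evaluate_conformal(pred_sets, true_labels):
    """Summarize test-set behaviour of a conformal classifier."""
    n = len(true_labels)
    if n == 0:
        raise ValueError("empty test set")
    pairs = list(zip(pred_sets, true_labels))
    singletons = [(s, y) for s, y in pairs if len(s) == 1]
    return {
        # share of records whose true label is inside the prediction set
        "coverage": sum(y in s for s, y in pairs) / n,
        # efficiency: smaller average sets mean more decisive predictions
        "mean_set_size": sum(len(s) for s, _ in pairs) / n,
        # share of records that would be auto-verified in the hierarchy
        "auto_accept_rate": len(singletons) / n,
        # accuracy among auto-verified records (NaN if there are none)
        "auto_accept_accuracy": (
            sum(y in s for s, y in singletons) / len(singletons) if singletons else float("nan")
        ),
    }


metrics = evaluate_conformal(
    [{"a"}, {"a", "b"}, {"c"}, {"b"}],
    ["a", "b", "a", "b"],
)
print(metrics)
```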

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools and Platforms for Citizen Science Data Management

| Tool Category | Example Solutions | Function in Addressing the 3 V's |
|---|---|---|
| Data Integration & Platforms | Zooniverse, iNaturalist, GBIF | Centralizes data ingestion; manages Variety through standardized formats and Volume via scalable databases [4]. |
| Automated Validation Engines | Conformal Taxonomic Validation Framework [5] | Provides confidence-scored species identification; increases Velocity by automating bulk record processing. |
| Quality Assurance & Documentation | EPA Quality Assurance Handbook & Toolkit [9] | Provides templates and protocols for data quality management, ensuring Veracity across diverse data sources. |
| Cloud & Distributed Computing | Kubernetes, Cloud Services (AWS, GCP) | Enables horizontal scaling to handle data Volume and Velocity via elastic computational resources [8]. |
| Data Governance & Security | Atempo Miria, Data Classification Tools | Ensures regulatory compliance, implements data retention policies, and secures sensitive information [10]. |

The Limitations of Traditional Expert-Only Verification Systems

In artificial intelligence, an expert system is a computer system that emulates the decision-making ability of a human expert, solving complex problems by reasoning over bodies of knowledge represented mainly as if–then rules [11]. Verification, validation, and evaluation (VV&E) are critical processes for ensuring that these systems function correctly. Verification determines whether the system is built according to its specifications (building the system right), while validation determines whether the system actually fulfills its intended purpose (building the right system) [12].

The complexity and uncertainty associated with these tasks have left most expert systems inadequately tested, potentially resulting in system failures and limited adoption [12]. This application note examines the inherent limitations of traditional expert-only verification approaches and proposes structured methodologies to strengthen verification protocols, with particular relevance to hierarchical systems for citizen science data quality.

Core Limitations of Expert-Only Verification Approaches

Traditional verification systems that rely exclusively on domain experts face several significant challenges that compromise their effectiveness and reliability.

Table 1: Key Limitations of Traditional Expert-Only Verification Systems

| Limitation Category | Specific Challenge | Impact on System Reliability |
|---|---|---|
| Knowledge Base Issues | Knowledge concentrated in narrowly defined areas | Today's expert systems have no common-sense knowledge; they only "know" exactly what has been input into their knowledge bases [12]. |
| | Incomplete or uncertain information | Expert systems will be wrong some of the time even if they contain no errors, because the knowledge on which they are based does not completely predict outcomes [12]. |
| Specification Problems | Inherent vagueness in specifications | If precise specifications exist, it may be more effective to design systems using conventional programming languages instead of expert systems [12]. |
| Methodological Deficiencies | Lack of standardized testing procedures | There is little agreement among experts on how to accomplish VV&E of expert systems, leading to inadequate testing [12]. |
| | Inability to detect interactions | Traditional one-factor-at-a-time methods will always miss interactions between factors [13]. |
| Expert Dependency | Knowledge acquisition bottleneck | Reliance on limited expert availability for system development and verification [12]. |
| | Human expert fallibility | Like human experts, expert systems will be wrong some of the time [12]. |

Structured Methodologies for Enhanced Verification

Knowledge Base Partitioning and Analysis

Partitioning large knowledge bases into manageable components is essential for effective verification. This methodology enables systematic analysis of complex rule-based systems.

Table 2: Knowledge Base Partitioning Methodologies

| Methodology | Procedure | Application Context |
|---|---|---|
| Expert-Driven Partitioning | Partition knowledge base using expert domain knowledge | Results in a knowledge base that reflects the expert's conception of the knowledge domain, facilitating communication and maintenance [12]. |
| Function and Incidence Matrix Partitioning | Extract functions and incidence matrices from the knowledge base when expert insight is unavailable | Uses mathematical relationships within the knowledge base to identify logical partitions [12]. |
| Formal Proofs for Small Systems | Apply direct proof of completeness, consistency and specification satisfaction without partitioning | Suitable for small expert systems with limited rule sets [12]. |
| Knowledge Models | Implement high-level templates for expert knowledge (decision trees, flowcharts, state diagrams) | Organizes knowledge to suggest strategies for proofs and partitions; some models have mathematical properties that help establish completeness [12]. |

Experimental Protocol: Knowledge Base Partitioning Verification

- Extract Rule Dependencies: Map all rules and their relationships within the knowledge base using incidence matrices [12].

- Identify Logical Groupings: Apply clustering algorithms to identify naturally occurring rule groupings based on shared variables and dependencies.

- Validate Partition Logic: Present partitioned groups to domain experts for validation of logical consistency.

- Verify Inter-Partition Relationships: Ensure component systems agree among themselves through formal relationship checking [12].

- Execute Component-Level Testing: Verify each partition independently for completeness and consistency.

- Perform Integrated System Testing: Validate the fully integrated system against expected outcomes.
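Steps 1 and 2 of this protocol can be illustrated with a toy rule base. In this sketch each rule is reduced to the set of variables it reads or writes, the incidence matrix marks rule-variable membership, and, as a simple stand-in for a clustering algorithm, rules are grouped into connected components of rules that share variables (using union-find). The rule and variable names are hypothetical.

```python
def incidence_matrix(rules):
    """Rows = rules, columns = variables; 1 where a rule reads or writes a variable."""
    variables = sorted(set().union(*rules.values()))
    rows = [[1 if v in rules[r] else 0 for v in variables] for r in rules]
    return variables, rows


def partition_rules(rules):
    """Group rules into connected components of rules that share variables."""
    parent = {r: r for r in rules}

    def find(r):
        while parent[r] != r:
            parent[r] = parent[parent[r]]  # path halving keeps trees shallow
            r = parent[r]
        return r

    owner = {}  # first rule seen for each variable
    for r, variables in rules.items():
        for v in variables:
            if v in owner:
                parent[find(r)] = find(owner[v])  # union the two groups
            else:
                owner[v] = r

    groups = {}
    for r in rules:
        groups.setdefault(find(r), []).append(r)
    return sorted(sorted(g) for g in groups.values())


# Hypothetical rule base: rule id -> variables referenced by the rule.
rules = {
    "R1": {"image_quality", "needs_review"},
    "R2": {"needs_review", "route_expert"},
    "R3": {"latitude", "range_ok"},
    "R4": {"range_ok", "taxon"},
    "R5": {"date_valid"},
}
variables, matrix = incidence_matrix(rules)
print(partition_rules(rules))  # [['R1', 'R2'], ['R3', 'R4'], ['R5']]
```

Each resulting group could then be handed to domain experts (step 3) and tested independently (step 5).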

Design of Experiments (DoE) for Validation

DoE provides a statistics-based method for running robustness trials that efficiently identifies factors affecting system performance and detects interactions between variables.

Figure 1: DoE validation workflow for systematic factor testing.

Experimental Protocol: Taguchi DoE for Expert System Validation

- Factor Identification: Identify all factors that could affect system performance (typically 20-30 factors) [13].

- Factor Level Assignment: Assign high and low values for quantitative factors; identify options for qualitative factors.

- Array Selection: Select appropriate Taguchi array (e.g., L12 for efficient testing) to minimize trials while maintaining coverage [13].

- Trial Execution: Execute trials according to the array combinations, recording all performance metrics.

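- Interaction Analysis: Analyze results to identify significant main effects. In a 12-run array such as L12, two-factor interactions are partially confounded with main effects across columns rather than estimable on their own, so suspected interactions should be confirmed with follow-up trials.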

- Robustness Assessment: Determine operating windows where system meets all specifications despite factor variations.

Table 3: Taguchi L12 Array Structure for Efficient Validation

| Trial Number | Factor 1 | Factor 2 | Factor 3 | Factor 4 | Factor 5 | Factor 6 | Factor 7 | Factor 8 | Factor 9 | Factor 10 | Factor 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 2 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 2 | 2 |
| 3 | 1 | 1 | 2 | 2 | 2 | 1 | 1 | 1 | 2 | 2 | 2 |
| 4 | 1 | 2 | 1 | 2 | 2 | 1 | 2 | 2 | 1 | 1 | 2 |
| 5 | 1 | 2 | 2 | 1 | 2 | 2 | 1 | 2 | 1 | 2 | 1 |
| 6 | 1 | 2 | 2 | 2 | 1 | 2 | 2 | 1 | 2 | 1 | 1 |
| 7 | 2 | 1 | 2 | 2 | 1 | 1 | 2 | 2 | 1 | 2 | 1 |
| 8 | 2 | 1 | 2 | 1 | 2 | 2 | 2 | 1 | 1 | 1 | 2 |
| 9 | 2 | 1 | 1 | 2 | 2 | 2 | 1 | 2 | 2 | 1 | 1 |
| 10 | 2 | 2 | 2 | 1 | 1 | 1 | 1 | 2 | 2 | 1 | 2 |
| 11 | 2 | 2 | 1 | 2 | 1 | 2 | 1 | 1 | 1 | 2 | 2 |
| 12 | 2 | 2 | 1 | 1 | 2 | 1 | 2 | 1 | 2 | 2 | 1 |
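The L12 array in Table 3 can be checked and analyzed in a few lines. This sketch verifies that every column is balanced and that every pair of columns contains each level combination equally often (three times), then computes main effects as the difference between mean responses at level 2 and level 1. The response values in the usage example are synthetic, chosen so that only Factor 1 matters.

```python
from collections import Counter
from itertools import combinations

# Taguchi L12 array from Table 3 (rows = trials, columns = factors, levels 1/2).
L12 = [
    [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2],
    [1, 1, 2, 2, 2, 1, 1, 1, 2, 2, 2],
    [1, 2, 1, 2, 2, 1, 2, 2, 1, 1, 2],
    [1, 2, 2, 1, 2, 2, 1, 2, 1, 2, 1],
    [1, 2, 2, 2, 1, 2, 2, 1, 2, 1, 1],
    [2, 1, 2, 2, 1, 1, 2, 2, 1, 2, 1],
    [2, 1, 2, 1, 2, 2, 2, 1, 1, 1, 2],
    [2, 1, 1, 2, 2, 2, 1, 2, 2, 1, 1],
    [2, 2, 2, 1, 1, 1, 1, 2, 2, 1, 2],
    [2, 2, 1, 2, 1, 2, 1, 1, 1, 2, 2],
    [2, 2, 1, 1, 2, 1, 2, 1, 2, 2, 1],
]


def is_orthogonal(array):
    """True if each column is balanced and each column pair shows every level pair equally often."""
    n_runs, n_cols = len(array), len(array[0])
    columns = [[row[j] for row in array] for j in range(n_cols)]
    if any(col.count(1) != n_runs // 2 for col in columns):
        return False
    for a, b in combinations(columns, 2):
        counts = Counter(zip(a, b))
        if sorted(counts.values()) != [n_runs // 4] * 4:
            return False
    return True


def main_effects(array, responses):
    """Main effect of each factor: mean response at level 2 minus mean at level 1."""
    effects = []
    for j in range(len(array[0])):
        high = [y for row, y in zip(array, responses) if row[j] == 2]
        low = [y for row, y in zip(array, responses) if row[j] == 1]
        effects.append(sum(high) / len(high) - sum(low) / len(low))
    return effects


# Synthetic responses: only Factor 1 matters (+3 at level 2).
responses = [10 + 3 * (row[0] == 2) for row in L12]
print(is_orthogonal(L12), main_effects(L12, responses)[:3])  # True [3.0, 0.0, 0.0]
```

Because the columns are orthogonal, the effect of Factor 1 does not leak into the estimates for the other ten factors.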

Formal Verification Methods for Rule-Based Systems

Formal methods provide mathematical rigor to the verification process, enabling proof of correctness for critical system components.

Experimental Protocol: Formal Proofs for Knowledge Base Verification

- Rule Transformation: Convert if-then rules into formal logical representations.

- Completeness Checking: Verify that all possible scenarios are addressed by the rule set.

- Consistency Checking: Ensure no contradictory conclusions can be derived from the rule set.

- Termination Verification: Confirm that inference chains will complete in finite time.

- Specification Compliance: Mathematically verify that system behavior matches formal specifications.
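For a small propositional rule base, the consistency, completeness, and termination checks above can be approximated by brute force. This sketch assumes rules whose antecedents and consequents are (variable, boolean) literals; it flags rule pairs that can fire together yet assign opposite values, enumerates Boolean input assignments that no rule covers, and reports cycles in the rule dependency graph as potential non-termination. It is an illustrative check rather than a formal theorem prover, and exhaustive enumeration only scales to a handful of input variables. The rule names are hypothetical.

```python
from itertools import combinations, product

# A literal is (variable, bool); a rule is (name, [antecedent literals], consequent literal).


def jointly_satisfiable(lits_a, lits_b):
    """True if no variable is required to be both True and False."""
    required = {}
    for var, val in list(lits_a) + list(lits_b):
        if required.setdefault(var, val) != val:
            return False
    return True


def find_conflicts(rules):
    """Consistency: rule pairs that can fire together but assign opposite values."""
    conflicts = []
    for (n1, ante1, (var1, val1)), (n2, ante2, (var2, val2)) in combinations(rules, 2):
        if var1 == var2 and val1 != val2 and jointly_satisfiable(ante1, ante2):
            conflicts.append((n1, n2))
    return conflicts


def uncovered_inputs(rules, input_vars):
    """Completeness: Boolean input assignments under which no rule fires."""
    gaps = []
    for values in product([False, True], repeat=len(input_vars)):
        state = dict(zip(input_vars, values))
        fires = any(all(state.get(v) == val for v, val in ante) for _, ante, _ in rules)
        if not fires:
            gaps.append(state)
    return gaps


def has_cycle(rules):
    """Termination: a cycle where a conclusion feeds back into an antecedent may never end."""
    graph = {name: [] for name, _, _ in rules}
    for name_a, _, (concl_var, _) in rules:
        for name_b, ante_b, _ in rules:
            if any(var == concl_var for var, _ in ante_b):
                graph[name_a].append(name_b)

    state = {name: "new" for name in graph}

    def visit(node):  # depth-first search; "active" marks the current path
        state[node] = "active"
        for nxt in graph[node]:
            if state[nxt] == "active" or (state[nxt] == "new" and visit(nxt)):
                return True
        state[node] = "done"
        return False

    return any(state[n] == "new" and visit(n) for n in graph)


rules = [
    ("R1", [("photo_present", True), ("ai_singleton", True)], ("accept", True)),
    ("R2", [("photo_present", False)], ("accept", False)),
    ("R3", [("ai_singleton", True)], ("accept", False)),
]
print(find_conflicts(rules))  # [('R1', 'R3')]
print(uncovered_inputs(rules, ["photo_present", "ai_singleton"]))
print(has_cycle(rules))  # False
```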

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Research Reagents and Solutions for Expert System Verification

| Reagent/Solution | Function/Application | Usage Context |
|---|---|---|
| Knowledge Base Shells | Provides framework for knowledge representation and inference engine implementation [11]. | Development environments for creating and modifying expert systems. |
| Rule Extraction Tools | Automates the process of converting expert knowledge into formal rule structures. | Initial knowledge acquisition and ongoing knowledge base maintenance. |
| Incidence Matrix Generators | Creates mathematical representations of rule dependencies for partitioning analysis [12]. | Knowledge base partitioning and dependency analysis. |
| Statistical Analysis Software | Enables Design of Experiments (DoE) and analysis of factor effects and interactions [13]. | Validation experimental design and results analysis. |
| Formal Verification Tools | Provides automated checking of logical consistency and completeness properties. | Critical system verification where mathematical proof of correctness is required. |
| Visualization Platforms | Creates diagrams for signaling pathways, experimental workflows, and logical relationships. | Communication of complex system structures and processes. |

Hierarchical Verification Framework for Citizen Science Applications

Citizen science data quality presents unique challenges that benefit from a hierarchical verification approach, moving beyond traditional expert-only methods.

Figure 2: Hierarchical verification framework for citizen science data.

Experimental Protocol: Implementing Hierarchical Verification

- Define Quality Tiers: Establish multiple tiers of data quality requirements based on intended use.

- Implement Automated Checks: Develop rule-based systems for initial data validation.

- Design Community Verification: Create protocols for peer review within citizen scientist communities.

- Establish Expert Sampling: Define statistical sampling approaches for targeted expert verification.

- Implement Feedback Loops: Create mechanisms for knowledge transfer between tiers.

- Validate System Effectiveness: Measure quality outcomes against traditional expert-only approaches.
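One way to implement the expert-sampling and effectiveness steps is to audit a random sample of automatically or community-verified records and report the observed error rate with a confidence interval. The sketch below uses simple random sampling and the Wilson score interval; the audit fraction, the function names, and the error count in the usage example are hypothetical.

```python
import math
import random


def draw_audit_sample(record_ids, fraction=0.05, seed=0):
    """Simple random sample of already-verified records for expert re-checking."""
    ids = list(record_ids)
    k = max(1, round(len(ids) * fraction))
    return random.Random(seed).sample(ids, k)


def wilson_interval(errors, n, z=1.96):
    """Wilson score interval for an error proportion (z=1.96 gives roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = errors / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


audit = draw_audit_sample(range(10_000), fraction=0.01)
# Hypothetical outcome: experts find 3 misidentifications among the audited records.
low, high = wilson_interval(3, len(audit))
print(len(audit), round(low, 3), round(high, 3))
```

The Wilson interval is used here instead of the simple normal approximation because it stays within [0, 1] and behaves sensibly when few or no errors are found.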

Traditional expert-only verification systems present significant limitations in completeness, efficiency, and reliability for complex expert systems. By implementing structured methodologies including knowledge base partitioning, Design of Experiments, formal verification methods, and hierarchical approaches, researchers can overcome these limitations and create more robust, reliable systems. For citizen science data quality research specifically, a hierarchical verification framework that appropriately distributes verification tasks across automated systems, community participants, and targeted expert review provides a more scalable and effective approach than exclusive reliance on expert verification.

Application Notes: Hierarchical Verification for Data Quality

Conceptual Framework and Rationale

The foundation of a hierarchical verification system is to maximize data quality assurance while optimizing the use of expert resources. This approach processes the majority of records through efficient, scalable methods, reserving intensive expert review for complex or uncertain cases [4]. In ecological citizen science, this model has proven essential for managing large-scale datasets collected by volunteers, where traditional expert-only verification becomes a bottleneck [4]. For biomedical applications, this framework offers a robust methodology for validating diverse data types—from community health observations to protein folding solutions—while maintaining scientific rigor and public trust [14].

Verification Approaches: Ecological Evidence and Biomedical Potential

Table 1: Citizen Science Data Verification Approaches

| Verification Method | Implementation in Ecology | Potential Biomedical Application | Relative Resource Intensity |
|---|---|---|---|
| Expert Verification | Traditional default; human experts validate species identification [4]. | NIH peer-review of citizen science grant proposals; validation of complex protein structures in Foldit [14]. | High |
| Community Consensus | Multiple volunteers independently identify specimens; aggregated decisions establish validity [4]. | Peer-validation of environmental health data in the Our Voice initiative; community-based review of patient-reported outcomes [14]. | Medium |
| Automated & Semi-Automated Approaches | Conformal taxonomic validation uses AI and statistical confidence measures for species identification [5]. | Automated validation of data from wearable sensors; AI-assisted analysis of community-submitted health imagery; semi-automated quality checks for Foldit solutions [14]. | Low |

Quantitative Analysis of Verification Methods

Table 2: Portfolio Analysis of NIH-Supported Citizen Science (2008-2022)

| Project Category | Number of Grants | Primary Verification Methods | Key Outcomes |
|---|---|---|---|
| Citizen Science Practice | 71 | Community engagement, bidirectional feedback, participant-directed learning [14]. | Direct public involvement in research process; tools for health equity (Our Voice); protein structure solutions (Foldit) [14]. |
| Citizen Science Theory | 25 | Development of guiding principles, ethical frameworks, and methodological standards [14]. | Established three core principles for public participation; defined criteria for meaningful partnerships in biomedical research [14]. |

Protocols

Protocol: Implementation of a Hierarchical Verification Workflow

Purpose and Scope

This protocol outlines a three-tiered hierarchical system for verifying citizen science data, adaptable for both ecological records and biomedical observations. The procedure ensures efficient, scalable data quality control.

Experimental Workflow

The following diagram illustrates the sequential and iterative process for hierarchical data verification.

Materials and Reagents

Table 3: Research Reagent Solutions for Citizen Science Verification

| Item Name | Function/Application | Specifications/Alternatives |
|---|---|---|
| Mobile Data Collection App | Enables standardized data capture by citizen scientists; ensures consistent metadata collection. | e.g., Stanford Healthy Neighborhood Discovery Tool; must include geo-tagging, timestamp, and data validation prompts [14]. |
| Conformal Prediction Framework | Provides statistical confidence measures for automated data validation by producing prediction sets that contain the true label at a user-specified rate [5]. | Implementation in Python/R; requires a pre-trained model and calibration dataset; key for Tier 1 automated screening [5]. |
| Community Consensus Platform | Facilitates peer-validation through independent multiple reviews; aggregates ratings for confidence scoring. | Can be built into existing platforms (e.g., Zooniverse) or as standalone web interfaces; requires clear rating criteria [4]. |
| Expert Review Interface | Presents flagged data with context for efficient specialist assessment; integrates automated and community feedback. | Should display original submission, automated scores, and community comments in a unified dashboard to expedite Tier 3 review [4]. |

Protocol: The Our Voice Citizen Science Method for Community Health

Purpose and Scope

This protocol details the Our Voice initiative method for engaging community members in identifying and addressing local health determinants. It demonstrates a successful biomedical application of citizen science with built-in community verification [14].

Experimental Workflow

The following diagram maps the iterative, community-driven process of the Our Voice model.

Materials and Reagents

Table 4: Essential Materials for Our Voice Implementation

| Item Name | Function/Application | Specifications/Alternatives |
|---|---|---|
| Stanford Healthy Neighborhood Discovery Tool | Mobile application for citizens to collect geo-tagged data, photos, and audio notes about community features affecting health [14]. | Required features: GPS tagging, multimedia capture, structured data entry; available on iOS and Android platforms [14]. |
| Community Facilitation Guide | Structured protocol for trained facilitators to lead community discussions about collected data and prioritize issues [14]. | Includes discussion prompts, prioritization exercises, and action planning templates; should be culturally and contextually adapted. |
| Data Integration & Visualization Platform | System for aggregating individual submissions into collective community maps and summaries for discussion [14]. | Can range from simple data dashboards to interactive maps; must present data clearly for community interpretation and decision-making. |

Hierarchical verification systems represent a critical framework for managing data quality in large-scale citizen science projects. These systems allocate verification effort across multiple taxonomic or confidence levels to balance operational efficiency against data accuracy. In citizen science, where volunteer-contributed data can reach hundreds of millions or even billions of observations (e.g., 113 million records in iNaturalist, 1.1 billion in eBird), implementing efficient hierarchical validation is essential for maintaining scientific credibility while managing computational and human resource constraints [15]. The core principle is to apply increasingly rigorous (and costly) verification methods only where cheaper checks leave uncertainty, trading comprehensive validation against practical feasibility.

The conformal taxonomic validation framework exemplifies this approach through machine learning systems that provide confidence measures for species identifications, allowing automated acceptance of high-confidence records while flagging uncertain classifications for expert review [5]. This hierarchical approach addresses fundamental challenges in citizen science data quality by creating structured pathways for data validation that maximize both throughput and reliability. For conservation planning applications, incorporating hierarchically verified citizen science data has demonstrated potential to significantly enhance the perceived credibility of conservation prioritizations with only minor cost increases, highlighting the practical value of robust verification systems [16].

Quantitative Framework and Performance Metrics

Hierarchical verification systems require precise quantitative frameworks to evaluate their performance at balancing efficiency and accuracy. The following tables summarize key metrics and standards essential for designing and implementing these systems in citizen science contexts.

Table 1: Key Performance Metrics for Hierarchical Verification Systems

| Metric | Definition | Calculation | Target Range |
|---|---|---|---|
| Automation Rate | Percentage of records resolved without human expert review | (Auto-validated records / Total records) × 100 | 60-80% for optimal efficiency [5] |
| Expert Validation Efficiency | Records processed per expert hour | Total expert-validated records / Expert hours | Project-dependent; should show increasing trend with system refinement |
| Accuracy Preservation | Final accuracy compared to full manual verification | (Hierarchical system accuracy / Full manual accuracy) × 100 | ≥95% for scientific applications [16] |
| Cost-Credibility Trade-off | Increased credibility per unit cost | ΔCredibility / ΔCost | Positive slope; optimal when minor cost increases yield significant credibility gains [16] |
| Uncertainty Resolution Rate | Percentage of uncertain classifications successfully resolved | (Resolved uncertain records / Total uncertain records) × 100 | ≥90% for high-quality datasets |
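The Table 1 formulas can be computed directly from counts logged during an evaluation run. The sketch below is a minimal illustration; the `VerificationRun` record and its field names are hypothetical, and "credibility" stands for whatever score a project adopts (the table does not prescribe one).

```python
from dataclasses import dataclass


@dataclass
class VerificationRun:
    """Counts logged for one evaluation run (field names are hypothetical)."""
    total_records: int
    auto_validated: int           # resolved without human expert review
    expert_validated: int         # records reviewed by an expert
    expert_hours: float
    hierarchical_accuracy: float  # accuracy of the hierarchical pipeline (0-1)
    full_manual_accuracy: float   # accuracy of full manual verification (0-1)
    uncertain_total: int          # records flagged as uncertain
    uncertain_resolved: int       # flagged records that received a final label


def table1_metrics(run: VerificationRun) -> dict:
    """Apply the Table 1 formulas; percentages are on a 0-100 scale."""
    return {
        "automation_rate_pct": 100.0 * run.auto_validated / run.total_records,
        "expert_records_per_hour": run.expert_validated / run.expert_hours,
        "accuracy_preservation_pct": 100.0 * run.hierarchical_accuracy / run.full_manual_accuracy,
        "uncertainty_resolution_pct": 100.0 * run.uncertain_resolved / run.uncertain_total,
    }


def cost_credibility_slope(cred_before: float, cred_after: float,
                           cost_before: float, cost_after: float) -> float:
    """ΔCredibility / ΔCost; a positive value means credibility rose with cost."""
    delta_cost = cost_after - cost_before
    if delta_cost == 0:
        raise ValueError("cost unchanged; the slope is undefined")
    return (cred_after - cred_before) / delta_cost
```

For example, 700 automatically validated records out of 1,000 give an automation rate of 70%, inside the 60-80% range listed in Table 1.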

Table 2: WCAG 2 Contrast Standards for Visualization Components (Applied to Hierarchical System Interfaces)

| Component Type | Minimum Ratio (AA) | Enhanced Ratio (AAA) | Application in Hierarchical Systems |
|---|---|---|---|
| Standard Text | 4.5:1 | 7:1 | Interface labels, instructions, data displays [17] |
| Large Text | 3:1 | 4.5:1 | Headers, titles, emphasized classification results [18] |
| UI Components | 3:1 | Not defined | Interactive controls, buttons, verification status indicators [19] |
| Graphical Objects | 3:1 | Not defined | Data visualizations, confidence indicators, taxonomic pathways [17] |

The metrics in Table 1 enable systematic evaluation of how effectively hierarchical systems balance automation with accuracy, while the visual accessibility standards in Table 2 ensure that system interfaces and visualizations remain usable across diverse researcher and contributor populations. The cost-credibility trade-off metric is particularly significant for conservation applications, where research indicates that incorporating citizen science data with proper validation can enhance stakeholder confidence in conservation prioritizations with only minimal cost implications [16].
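The Table 2 thresholds can be checked programmatically. The sketch below implements the WCAG 2 relative-luminance and contrast-ratio formulas for sRGB hex colors; the `THRESHOLDS_AA` mapping and the function names are illustrative, and a dedicated contrast validator remains the reference tool.

```python
THRESHOLDS_AA = {  # minimum AA ratios from Table 2
    "standard_text": 4.5,
    "large_text": 3.0,
    "ui_component": 3.0,
    "graphical_object": 3.0,
}


def _linearize(channel_8bit: int) -> float:
    """Convert one sRGB channel (0-255) to linear light, per the WCAG 2 definition."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color written as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """(L_lighter + 0.05) / (L_darker + 0.05); ranges from 1 to 21."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: str, bg: str, component: str) -> bool:
    """True if the color pair meets the Table 2 AA minimum for this component type."""
    return contrast_ratio(fg, bg) >= THRESHOLDS_AA[component]
```

For instance, #767676 text on a white background gives a ratio just above 4.5:1 and passes AA for standard text, whereas #777777 falls just below it (it still passes the 3:1 large-text threshold).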

Experimental Protocols for System Validation

Protocol: Conformal Prediction for Automated Taxonomic Validation

This protocol outlines the implementation of conformal prediction for hierarchical taxonomic classification, creating confidence measures that enable automated validation of citizen science observations [5].

Materials and Reagents

- Citizen science data records with image and metadata components

- Pre-trained deep learning models for species identification (e.g., hierarchical classification networks)

- Calibration dataset with expert-verified ground truth labels

- Computational infrastructure for model inference and confidence calibration

Procedure

Data Preparation and Hierarchical Structuring

- Organize taxonomic classes into hierarchical tree structure reflecting biological classification

- Partition dataset into training (60%), calibration (20%), and test (20%) subsets

- Apply data augmentation techniques to address class imbalance in training data

Model Training and Calibration

- Train deep learning models using hierarchical loss functions that incorporate taxonomic relationships

- Compute non-conformity scores using calibration set to measure prediction strangeness

- Set confidence thresholds for each taxonomic level based on desired error rate (typically α=0.05 for 95% confidence)

Hierarchical Prediction and Validation

- For each new observation, generate confidence measures across taxonomic hierarchy

- Automatically accept predictions exceeding confidence thresholds at appropriate taxonomic levels

- Route low-confidence predictions to human experts for verification with uncertainty reason codes

- Implement adaptive threshold adjustment based on expert feedback and error patterns
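One way to accept predictions "at appropriate taxonomic levels" is to map a species-level prediction set upward through the taxonomy and accept the finest rank at which it collapses to a single taxon. The sketch below assumes a simple lineage lookup table; the names and structure are hypothetical and this is not presented as the method of [5]. Because the true species' genus is among the genera of the set whenever the true species is in the set, a genus-level answer derived this way keeps at least the species-level coverage.

```python
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical lineage table: species -> {rank: taxon}
Lineage = Dict[str, Dict[str, str]]


def resolve_rank(prediction_set: Sequence[str], lineage: Lineage,
                 ranks: Sequence[str] = ("species", "genus", "family"),
                 ) -> Tuple[Optional[str], Optional[str]]:
    """Return (rank, taxon) for the finest rank at which every species in the
    prediction set maps to the same taxon. Returns (None, None) if the set is
    empty or still ambiguous at the coarsest rank, e.g. for expert review."""
    if not prediction_set:
        return None, None
    for rank in ranks:
        taxa = {lineage[species][rank] for species in prediction_set}
        if len(taxa) == 1:
            return rank, taxa.pop()
    return None, None


LINEAGE: Lineage = {
    "Parus major": {"species": "Parus major", "genus": "Parus", "family": "Paridae"},
    "Cyanistes caeruleus": {"species": "Cyanistes caeruleus", "genus": "Cyanistes", "family": "Paridae"},
    "Cyanistes teneriffae": {"species": "Cyanistes teneriffae", "genus": "Cyanistes", "family": "Paridae"},
    "Sitta europaea": {"species": "Sitta europaea", "genus": "Sitta", "family": "Sittidae"},
}
```

A set of the two Cyanistes species, for example, resolves to the genus Cyanistes, while a set mixing a tit and a nuthatch stays unresolved and would be routed onward.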

Validation and Quality Control

- Compute empirical coverage on held-out data to confirm that the proportion of prediction sets containing the true label meets the target confidence level

- Monitor automation rates and expert workload weekly to optimize threshold settings

- Conduct periodic accuracy audits comparing hierarchical system results against full manual verification

Protocol: Systematic Performance Evaluation of Hierarchical Verification

This protocol provides a standardized methodology for evaluating the efficiency-accuracy trade-offs in hierarchical verification systems, enabling comparative analysis across different implementation approaches.

Materials and Reagents

- Fully verified reference dataset (gold standard)

- Candidate hierarchical verification system implementation

- Computational resources for performance benchmarking

- Statistical analysis software (R, Python with scikit-learn, or equivalent)

Procedure

Baseline Establishment

- Process entire dataset through full manual verification to establish accuracy baseline

- Calculate resource requirements (time, cost, expert hours) for full verification approach

- Document error patterns and uncertainty distribution across taxonomic groups

Hierarchical System Implementation

- Configure confidence thresholds based on conformal prediction or alternative methods

- Process dataset through hierarchical system, recording automation decisions

- Route uncertain cases to expert verification following system protocols

- Record processing time, resource allocation, and verification outcomes

Comparative Analysis

- Calculate performance metrics from Table 1 for both hierarchical and full verification

- Construct precision-recall curves for automated classification components

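- Perform statistical significance testing on accuracy differences (α = 0.05) using a paired test, such as McNemar's test on per-record correctness, since both approaches are scored on the same records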

- Compute cost-benefit ratios comparing resource requirements against accuracy preservation

Quality Assurance

- Implement blind verification procedures where experts are unaware of automated classifications

- Ensure inter-expert agreement exceeds a Cohen's kappa of 0.8 for subjective classifications

- Confirm that the study has adequate statistical power to detect practically significant accuracy differences (> 2 percentage points)
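The agreement and comparison checks in this protocol can be sketched with the standard library. Cohen's kappa follows its usual definition; for the paired accuracy comparison the sketch uses McNemar's exact test, a common choice when two approaches are scored on the same records, shown here as an illustration. Function names and input formats are assumptions.

```python
from collections import Counter
from math import comb
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two experts labelling the same records."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # convention: both raters used the same single category throughout
    return (observed - expected) / (1.0 - expected)


def mcnemar_exact_p(correct_a: Sequence[bool], correct_b: Sequence[bool]) -> float:
    """Two-sided exact McNemar test on paired per-record correctness."""
    b = sum(x and not y for x, y in zip(correct_a, correct_b))  # only A correct
    c = sum(y and not x for x, y in zip(correct_a, correct_b))  # only B correct
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs: no evidence of a difference
    tail = sum(comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)
```

Only discordant records (correct under one approach but not the other) enter McNemar's test, which is why it suits a comparison of two verification pipelines run on the same gold-standard dataset.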

Visualization and Workflow Design

Effective hierarchical verification systems require clear visual representations of their workflows and decision pathways. The following diagrams illustrate core structural and procedural components; their colors should meet the WCAG contrast thresholds listed in Table 2.

Diagram 1: Hierarchical Taxonomic Classification Structure

Diagram 2: Hierarchical Verification Decision Workflow

Research Reagent Solutions

The implementation of hierarchical verification systems requires specific computational tools and platforms that enable efficient data processing, model training, and validation workflows. The following table details essential research reagents for establishing citizen science data quality frameworks.

Table 3: Essential Research Reagents for Hierarchical Verification Systems

| Reagent/Platform | Type | Primary Function | Application in Hierarchical Systems |
|---|---|---|---|
| Conformal Prediction Framework | Software Library | Generate confidence measures for predictions | Provides probabilistic confidence scores for automated taxonomic classifications [5] |
| Citizen Science Platform (CSP) | Research Infrastructure | Data collection and volunteer engagement | Serves as data source and implementation environment for hierarchical verification [15] |
| Global Biodiversity Information Facility (GBIF) | Data Repository | Biodiversity data aggregation and sharing | Provides reference data for model training and validation [15] |
| Hierarchical Classification Models | Machine Learning Algorithm | Multi-level taxonomic identification | Core component for automated identification across taxonomic ranks [5] |
| Color Contrast Validator | Accessibility Tool | Verify visual interface compliance | Ensures accessibility of system interfaces and visualizations [19] [17] |
| Species Distribution Models | Statistical Model | Predict species occurrence probabilities | Supports data validation through environmental and spatial consistency checks [16] |

These research reagents collectively enable the implementation of complete hierarchical verification pipelines, from data collection through automated classification to expert review and final publication. The conformal prediction framework is particularly crucial as it provides the mathematical foundation for confidence-based automation decisions, while citizen science platforms offer the technological infrastructure for deployment at scale [5] [15].

Implementing a Multi-Layered Verification Framework: From Theory to Practice

In the context of a hierarchical verification system for citizen science data quality, Tier 1 represents the foundational layer of automated, high-throughput data validation. This tier is designed to handle the enormous volume of data generated by citizen scientists, which often presents challenges related to variable participant expertise and data quality [4]. The core function of Tier 1 is to provide rapid, automated filtering and qualification of species identification records, flagging records with high confidence for immediate use and referring ambiguous cases to higher tiers (e.g., community consensus or expert review) for further verification.

The Conformal Prediction (CP) framework is particularly suited for this task because it provides a statistically rigorous method for quantifying the uncertainty of predictions made by deep learning models. Unlike standard classification models that output a single prediction, conformal prediction generates a prediction set: a collection of plausible labels that contains the true label with at least a user-defined probability (e.g., 90% or 95%) [20] [21]. This property, known as validity, holds under the common assumption that the data are exchangeable [20]. For citizen science, this means that an automated system can be calibrated to control how often the true species is left out of the prediction set, providing a measurable and trustworthy level of data quality from the outset.

Theoretical Foundations of Conformal Prediction

Conformal prediction is a framework that can be built on top of any existing machine learning model (termed the "underlying algorithm") to endow it with calibrated uncertainty metrics. The fundamental output is a prediction set \( C(X_{\text{new}}) \subseteq \mathbf{Y} \) for a new example \( X_{\text{new}} \), which satisfies the coverage guarantee \( P(Y_{\text{new}} \in C(X_{\text{new}})) \geq 1 - \alpha \), where \( 1 - \alpha \) is the pre-specified confidence level (e.g., 0.95) [20]. In the split (inductive) form used throughout this section, this is achieved through a four-step process:

- Non-Conformity Measure: A function \( A(x, y) \) that quantifies how "strange" or non-conforming a data point \( (x, y) \) is relative to a set of other examples. For a classification model, a common non-conformity measure is \( 1 - f_y(x) \), where \( f_y(x) \) is the model's predicted probability for class \( y \) (the true class during calibration, each candidate class at prediction time) [20].

- Calibration: The model is applied to a held-out calibration set, generating a non-conformity score for each example in this set.

- Quantile Calculation: A finite-sample-corrected quantile of the \( n \) calibration scores, denoted \( \hat{q} \), is computed: the \( \lceil (n+1)(1-\alpha) \rceil \)-th smallest score, a slight upward adjustment of the empirical \( (1-\alpha) \)-quantile.

- Prediction Set Formation: For a new test example \( X_{\text{new}} \), the prediction set contains all labels \( y \) for which the non-conformity score \( A(X_{\text{new}}, y) \) is less than or equal to \( \hat{q} \) [20].

This process ensures that the prediction set contains the true label with probability at least \( 1-\alpha \); the guarantee is marginal, holding on average over records rather than for each individual record. In the context of citizen science, and with the \( 1 - f_y(x) \) score above, an empty prediction set indicates that no label is plausible enough to include, which is a clear signal for the record to be escalated to a higher tier in the verification hierarchy. A prediction set with a single label indicates a high-confidence prediction suitable for automated verification, while a set with multiple labels flags the record as ambiguous.
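The four steps can be sketched in a few lines of NumPy. This is a minimal illustration of split conformal prediction with the \( 1 - f_y(x) \) score, assuming the underlying model already outputs class probabilities; the function names are hypothetical, and libraries such as TorchCP or MAPIE provide maintained implementations. The threshold is the \( \lceil (n+1)(1-\alpha) \rceil \)-th smallest calibration score.

```python
import numpy as np


def calibrate_threshold(cal_probs: np.ndarray, cal_labels: np.ndarray, alpha: float) -> float:
    """Split-conformal threshold q_hat using the score A(x, y) = 1 - f_y(x).

    cal_probs: (n, K) predicted class probabilities on the calibration set.
    cal_labels: (n,) integer indices of the expert-verified classes.
    """
    n = len(cal_labels)
    scores = 1.0 - cal_probs[np.arange(n), cal_labels]  # score of the true class
    k = int(np.ceil((n + 1) * (1 - alpha)))              # rank of the order statistic
    if k > n:
        return float("inf")  # too few calibration points: every label is included
    return float(np.sort(scores)[k - 1])


def prediction_sets(test_probs: np.ndarray, q_hat: float) -> list:
    """For each test row, keep every class y with 1 - f_y(x) <= q_hat."""
    return [np.flatnonzero(1.0 - row <= q_hat).tolist() for row in test_probs]
```

With a 3-class model, a confident test row such as (0.95, 0.03, 0.02) typically yields a single-label set, while a split row such as (0.5, 0.45, 0.05) yields two labels and would be treated as ambiguous.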

Implementation Protocols

Model and Algorithm Selection

The following table summarizes the core components required for implementing a conformal prediction system for species identification.

Table 1: Core Components for a Conformal Prediction System

| Component | Description | Example Methods & Tools |
|---|---|---|
| Deep Learning Model | A pre-trained model for image-based species classification. | CNN (e.g., ResNet, EfficientNet) fine-tuned on a target taxa dataset [5]. |
| Non-Conformity Score | Measures how dissimilar a new example is from the calibration data. | Least Ambiguous Set Selector (LAPS) [22], Adaptive Prediction Sets (APS) [22], or a simple score like \( 1 - f_y(x) \) [20]. |
| Conformal Library | Software to handle calibration and prediction set formation. | TorchCP (PyTorch-based) [22], MAPIE, or nonconformist. |
| Calibration Dataset | A held-out set of labeled data, representative of the target domain, used to calibrate the coverage. | A curated subset of verified citizen science records from platforms like iNaturalist or GBIF [5] [15]. |

Detailed Experimental Workflow

The workflow for Tier 1 verification can be broken down into a training/calibration phase and a deployment phase. The following diagram illustrates the end-to-end process.

Figure 1: Workflow for Tier 1 Automated Verification. The system is first calibrated on a labeled dataset to establish a statistical threshold (q̂). During deployment, each new record is processed to generate a conformal prediction set, the size of which determines its routing within the hierarchical verification system.

Protocol Steps:

Data Preparation and Model Training

- Source a labeled dataset of species occurrences, such as those available from the Global Biodiversity Information Facility (GBIF) [5].

- Split the data into three parts: a training set, a calibration set, and a test set. A typical split is 70%/15%/15%.

- Train or fine-tune a deep learning model (e.g., a Convolutional Neural Network) on the training set for the task of species classification.
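A split along these lines could be sketched as below. Stratifying by species is an assumption (the protocol does not require it) that keeps rare taxa represented in each part; the function name and seed handling are illustrative, and the fractions can be set to 70/15/15 or 60/20/20. Drawing the calibration split from the same distribution as deployment data matters because the coverage guarantee assumes exchangeability.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_three_way_split(labels: Sequence[Hashable],
                               fractions: Tuple[float, float, float] = (0.70, 0.15, 0.15),
                               seed: int = 0) -> Dict[str, List[int]]:
    """Split record indices into train/calibration/test, class by class."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    rng = random.Random(seed)
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    split: Dict[str, List[int]] = {"train": [], "calibration": [], "test": []}
    for indices in by_class.values():
        rng.shuffle(indices)
        n = len(indices)
        n_train = round(fractions[0] * n)
        n_cal = round(fractions[1] * n)
        # Slicing keeps the three parts disjoint and exhaustive even after rounding.
        split["train"].extend(indices[:n_train])
        split["calibration"].extend(indices[n_train:n_train + n_cal])
        split["test"].extend(indices[n_train + n_cal:])
    return split
```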

Calibration Phase (One-Time Setup)

- Using the calibration dataset, compute the non-conformity score for each example. For instance, using the APS score: \( A(x_i, y_i) \) for each calibration example \( (x_i, y_i) \) [22].

- Sort these scores in ascending order: \( A_1 \leq A_2 \leq \dots \leq A_n \).

- Compute the calibrated threshold \( \hat{q} \) as the \( \lceil (n+1)(1-\alpha) \rceil \)-th smallest of these scores, equivalently the empirical quantile at level \( \lceil (n+1)(1-\alpha) \rceil / n \) [20]. If this rank exceeds \( n \), the calibration set is too small for the chosen \( \alpha \). This threshold is saved for the deployment phase.
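For the APS score mentioned above, a non-randomized version can be sketched as follows; the randomized variant described in the APS literature is omitted, and all names are illustrative. The score of label \( y \) is the total probability of all labels ranked at or above \( y \). One caveat for routing: with this score an empty set arises when the top label alone carries more probability than \( \hat{q} \), i.e. from an overconfident rather than an atypical prediction, so empty-set escalation is most natural with the \( 1 - f_y(x) \) score.

```python
import numpy as np


def aps_scores(probs: np.ndarray) -> np.ndarray:
    """Non-randomized APS scores for every (example, label) pair.

    Entry [i, y] is the total probability of all labels ranked at or above y
    (most to least likely) for example i.
    """
    order = np.argsort(-probs, axis=1)                    # labels sorted by probability
    cumulative = np.cumsum(np.take_along_axis(probs, order, axis=1), axis=1)
    scores = np.empty_like(probs, dtype=float)
    np.put_along_axis(scores, order, cumulative, axis=1)  # back to original label order
    return scores


def conformal_threshold(cal_scores: np.ndarray, alpha: float) -> float:
    """The ceil((n + 1)(1 - alpha))-th smallest calibration score."""
    n = len(cal_scores)
    k = int(np.ceil((n + 1) * (1 - alpha)))
    return float("inf") if k > n else float(np.sort(cal_scores)[k - 1])


def calibrate_aps(cal_probs: np.ndarray, cal_labels: np.ndarray, alpha: float) -> float:
    """Score each calibration example at its verified label, then take the threshold."""
    true_scores = aps_scores(cal_probs)[np.arange(len(cal_labels)), cal_labels]
    return conformal_threshold(true_scores, alpha)


def aps_prediction_sets(test_probs: np.ndarray, q_hat: float) -> list:
    """Keep every label whose APS score is at most q_hat."""
    return [np.flatnonzero(row <= q_hat).tolist() for row in aps_scores(test_probs)]
```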

Deployment and Hierarchical Routing

- For a new citizen science image \( X_{\text{new}} \), the deep learning model extracts features and computes probabilities.

- The conformal predictor generates the prediction set \( C(X_{\text{new}}) \) by including all labels \( y \) for which the non-conformity score \( A(X_{\text{new}}, y) \leq \hat{q} \) [20].

- The routing decision is made automatically based on the prediction set:

  - \( |C(X_{\text{new}})| = 1 \) (single species): The record is automatically verified and accepted at Tier 1.

  - \( |C(X_{\text{new}})| > 1 \) (multiple species): The record is flagged as ambiguous and escalated to Tier 2 for resolution via community consensus [4].

  - \( |C(X_{\text{new}})| = 0 \) (empty set): The record is highly atypical or represents a potential outlier. It is escalated to Tier 3 for review by a domain expert [20].
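The routing rule is a direct function of prediction-set size. A minimal sketch with hypothetical names, which could sit after whichever conformal predictor a project uses:

```python
from collections import Counter
from enum import IntEnum
from typing import Iterable, Sequence


class Tier(IntEnum):
    AUTO_ACCEPT = 1  # single-label set: verified automatically at Tier 1
    COMMUNITY = 2    # multi-label set: ambiguous, sent to community consensus
    EXPERT = 3       # empty set: atypical record, sent to a domain expert


def route(prediction_set: Sequence[str]) -> Tier:
    """Map a conformal prediction set to a verification tier by its size."""
    size = len(prediction_set)
    if size == 1:
        return Tier.AUTO_ACCEPT
    if size > 1:
        return Tier.COMMUNITY
    return Tier.EXPERT


def tier_distribution(prediction_sets: Iterable[Sequence[str]]) -> Counter:
    """Count how many records land in each tier."""
    return Counter(route(s) for s in prediction_sets)
```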

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools and Libraries for Implementation

| Item | Function / Purpose | Specifications / Examples |
|---|---|---|
| TorchCP Library | A PyTorch-native library providing state-of-the-art conformal prediction algorithms for classification, regression, and other deep learning tasks. | Offers predictors (e.g., Split CP), score functions (e.g., APS, RAPS), and trainers (e.g., ConfTr). Features GPU acceleration [22]. |
| GBIF Datasets | Provides access to a massive, global collection of species occurrence records, which can be used for training and calibrating models. | Datasets can be accessed via DOI; example datasets are listed in the conformal taxonomic validation study [5]. |
| Pre-trained CNN Models | Serves as a robust starting point for feature extraction and transfer learning, reducing training time and computational cost. | Architectures such as ResNet-50 or EfficientNet pre-trained on ImageNet, fine-tuned on a specific taxonomic group. |
| CloudResearch Sentry | A fraud-prevention tool that can be used in a layered QC system to block bots and inauthentic respondents before data enters the system [23]. | Part of a layered quality control approach to ensure the integrity of the data source before algorithmic verification. |

Validation and Performance Metrics

To evaluate the performance of the Tier 1 verification system, both the statistical guarantees of conformal prediction and standard machine learning metrics should be assessed.

Table 3: Key Performance Metrics for System Validation

| Metric | Definition | Target Value for Tier 1 |
|---|---|---|
| Coverage | The empirical fraction of times the true label is contained in the prediction set. Should be approximately \( 1-\alpha \) [20]. | ≥ 0.95 (for α = 0.05) |
| Efficiency (Set Size) | The average size of the prediction sets. Smaller sets indicate more precise and informative predictions [20]. | As close to 1.0 as possible |
| Tier 1 Throughput | The percentage of records automatically verified at Tier 1 (i.e., prediction set size = 1). | Maximize without sacrificing coverage |
| Expert Workload Reduction | The percentage of records that do not require Tier 3 expert review (i.e., prediction set size > 0). | Maximize (e.g., >85%) |

Validation Protocol:

- Use the held-out test set to compute all metrics listed in Table 3.

- Run an ablation study comparing the conformal model against the base deep learning model to quantify the improvement in uncertainty quantification.

- Report the distribution of records across the three tiers of the hierarchical system to demonstrate the reduction in workload for human experts. The aim, in line with the hierarchical approach proposed in [4] for managing data volume, is for a large majority of records to be verified automatically; calibration alone does not guarantee this, because the share of single-label sets also depends on the accuracy of the underlying model.
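A sketch of how the Table 3 metrics and the tier distribution could be computed from test-set prediction sets, assuming sets are lists of integer class indices (names are hypothetical; values are fractions rather than percentages). It also reports the error rate among automatically accepted single-label sets, since marginal coverage does not by itself bound that quantity.

```python
from typing import Sequence

import numpy as np


def evaluate_tier1(pred_sets: Sequence[Sequence[int]], true_labels: Sequence[int]) -> dict:
    """Table 3 metrics on a held-out test set, reported as fractions (0-1)."""
    sizes = np.array([len(s) for s in pred_sets])
    covered = np.array([y in s for s, y in zip(pred_sets, true_labels)])
    single = sizes == 1
    return {
        "coverage": float(covered.mean()),
        "mean_set_size": float(sizes.mean()),
        "tier1_throughput": float(single.mean()),
        "expert_workload_reduction": float((sizes > 0).mean()),
        # Error rate among records accepted automatically at Tier 1.
        "singleton_error_rate": float(1.0 - covered[single].mean()) if single.any() else float("nan"),
        "tier_shares": {
            1: float(single.mean()),
            2: float((sizes > 1).mean()),
            3: float((sizes == 0).mean()),
        },
    }
```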

Integration within a Hierarchical Verification System

Tier 1 is not designed to operate in isolation. Its effectiveness is maximized when integrated with the broader hierarchical verification framework proposed for citizen science data quality [4]. The core principle is that the conformal prediction framework provides a statistically sound, tunable filter. A higher confidence level \( 1-\alpha \) will result in larger prediction sets on average, increasing coverage but also increasing the number of records escalated to Tiers 2 and 3. Conversely, a lower confidence level will make Tier 1 more aggressive, automating more verifications but risking a higher rate of misclassification. This trade-off can be adjusted based on the criticality of the data and the resources available for human-in-the-loop verification. This hierarchical approach, where the bulk of records are verified by automation or community consensus and only flagged records undergo expert verification, is considered an ideal system for managing large-scale citizen science data [4].

Within a hierarchical verification system for citizen science data, Tier 2 represents a crucial intermediary layer that leverages the collective intelligence of a community of contributors. This tier sits above fully automated checks (Tier 1) and below expert-led audits (Tier 3), providing a scalable method for improving data trustworthiness [24]. Community consensus techniques are defined as processes where multiple independent contributors review, discuss, and validate individual data records or contributions, leading to a collective judgment on their accuracy and reliability [24] [4]. The core strength of this approach lies in its ability to harness diverse knowledge and perspectives, facilitating the identification of errors, misinformation, or unusual observations that automated systems might miss [24]. In ecological citizen science, for instance, community consensus has been identified as an established and growing method for verifying species identification records [4]. These techniques are vital for enhancing the perceived credibility of both the data and the contributors, forming a reinforcing loop where high-quality contributions build user reputation, which in turn increases the trust placed in their future submissions [24].

Current Approaches and Methodologies

Community consensus manifests differently across various crowdsourcing platforms and disciplines. A systematic review of 259 ecological citizen science schemes revealed that community consensus is a recognized verification method, employed by numerous projects to confirm species identities after data submission [4]. The foundational principle across all implementations is the use of multi-source independent observations to establish reliability through convergence [24].

Common Implementation Models

- Wiki Ecosystems (e.g., Wikipedia, Wikidata): These platforms operationalize community consensus through collaborative editorial processes. Key features include "talk page" discussions where contributors debate the verifiability and neutrality of information, and revision histories that allow the community to revert questionable edits. A core policy supporting this is "no original research," which requires all information to be corroborated by reliable, published sources, forcing consensus around citable evidence [24].

- Specialized Ecological Platforms (e.g., Zooniverse projects, iNaturalist): On these platforms, consensus often emerges from multiple users independently identifying the same species in an uploaded image or dataset. On iNaturalist, for example, records that receive consistent identifications from different users are automatically upgraded to "Research Grade" status, indicating a high level of community validation [4].

- Social Media and Review Platforms (e.g., X, Yelp, TripAdvisor): While more susceptible to misinformation, these platforms employ community-driven flags and moderation. Users can report inaccurate content, and the volume of reports or the consensus emerging from user reviews can trigger automated or human review processes [24].

A systematic review of ecological citizen science schemes provides insight into the adoption rate of community consensus relative to other verification methods [4].

Table 1: Verification Approaches in Published Ecological Citizen Science Schemes

| Verification Approach | Prevalence Among Schemes | Key Characteristics |
|---|---|---|
| Expert Verification | Most widely used, especially among longer-running schemes | Traditional default; relies on a single or small number of authoritative figures. |
| Community Consensus | Established and growing use | Scalable; leverages collective knowledge of a contributor community. |
| Automated Approaches | Emerging, with potential for growth | Efficient for high-volume data; often relies on machine learning models. |

Experimental Protocols for Community Consensus

This section provides a detailed, actionable protocol for implementing a community consensus validation system, suitable for research and application in citizen science projects.

Protocol: Multi-Stage Consensus for Ecological Data Validation

1. Objective: To establish a standardized workflow for validating species identification records through community consensus, ensuring data quality for research use.

2. Experimental Workflow:

The following diagram illustrates the hierarchical data verification process, positioning community consensus within a larger framework.

3. Materials and Reagents:

Table 2: Research Reagent Solutions for Consensus Validation

| Item | Function/Description |
|---|---|
| Community Engagement Platform (e.g., iNaturalist, Zooniverse, custom web portal) | A web-based platform that allows for the upload of records (images, audio, GPS points) and enables multiple users to view and annotate them. |
| Data Submission Interface | A user-friendly form for contributors to submit observations, including fields for media upload, location, date/time, and initial identification. |
| Consensus Algorithm | A software script (e.g., Python, R) that calculates agreement metrics, such as the percentage of users agreeing on a species ID, and applies a pre-defined threshold (e.g., ≥ 67% agreement) for consensus. |
| User Reputation System Database | A backend database that tracks user history, including the proportion of a user's past identifications that were later confirmed by consensus or experts, generating a credibility score [24]. |
| Communication Module | Integrated email or notification system to alert users when their records are reviewed or when they are asked to review records from others. |

4. Step-by-Step Procedure:

   1. Data Ingestion: A participant submits a species observation record via the platform's interface. The record includes a photograph, GPS coordinates, timestamp, and the participant's proposed species identification.
   2. Initial Triage (Tier 1): Automated checks verify that all required fields are populated, the media file is not corrupt, and the GPS coordinates are within a plausible range.
   3. Community Exposure: The record, now labeled as "Needs ID," is made available in a dedicated queue on the platform for other registered users to examine.
   4. Independent Identification: A minimum of three other users (the number can be adjusted based on project size and activity) must provide an independent identification for the record without seeing others' identifications first.
   5. Consensus Calculation: The consensus algorithm continuously monitors the record and compares all proposed identifications.
      - If ≥ 67% of identifiers (including the original submitter) agree on a species, the record is automatically promoted to "Research Grade" [4].
      - If identifications are conflicting or a rare/sensitive species is reported, the algorithm flags the record for Tier 3 expert review.
   6. Feedback and Reputation Update: The outcome is communicated to all involved users. The reputation score of each user who provided an identification is updated based on whether their ID aligned with the final consensus or expert decision [24].
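The consensus and reputation rules in steps 4 to 6 can be sketched as follows. This is a minimal illustration of the thresholds stated above (three independent identifiers besides the submitter, at least 67% agreement with the submitter included), not the algorithm of any particular platform; the status strings, function names, and reputation formula (the share of a user's past identifications that matched the final outcome) are assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Optional, Set, Tuple

MIN_OTHER_IDENTIFIERS = 3   # independent IDs required besides the submitter
AGREEMENT_THRESHOLD = 0.67  # share of all identifiers, submitter included


def consensus_status(submitter: str, identifications: Dict[str, str],
                     sensitive_taxa: Set[str]) -> Tuple[str, Optional[str], float]:
    """Return (status, agreed_taxon, agreement) for one record.

    identifications maps user id -> proposed taxon and includes the submitter.
    status is "expert_review", "needs_id", or "research_grade".
    """
    if not identifications:
        return "needs_id", None, 0.0
    taxon, votes = Counter(identifications.values()).most_common(1)[0]
    agreement = votes / len(identifications)
    if sensitive_taxa & set(identifications.values()):
        return "expert_review", None, agreement  # rare/sensitive species reported
    others = sum(1 for user in identifications if user != submitter)
    if others < MIN_OTHER_IDENTIFIERS:
        return "needs_id", None, agreement       # keep waiting for identifiers
    if agreement >= AGREEMENT_THRESHOLD:
        return "research_grade", taxon, agreement
    return "expert_review", None, agreement      # conflicting identifications


@dataclass
class Reputation:
    confirmed: int = 0
    total: int = 0

    @property
    def score(self) -> float:
        """Share of past IDs that matched the final outcome (0.0 for new users)."""
        return self.confirmed / self.total if self.total else 0.0


def update_reputations(reps: Dict[str, Reputation], identifications: Dict[str, str],
                       final_taxon: str) -> None:
    """Credit each identifier whose proposal matched the final decision."""
    for user, taxon in identifications.items():
        rep = reps.setdefault(user, Reputation())
        rep.total += 1
        rep.confirmed += int(taxon == final_taxon)
```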

5. Analysis and Validation:

   - Quantitative Metrics: Calculate the percentage of records resolved by community consensus versus those escalated to experts. Monitor the time-to-consensus for records.
   - Quality Control: Periodically, take a random sample of "Research Grade" records and have an expert blindly validate them to measure the error rate of the community consensus process.
   - Data Output: The final, validated dataset should include the agreed-upon identification and a confidence metric derived from the level of consensus (e.g., 70% vs. 100% agreement).
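The quality-control step above can be sketched as a random audit sample plus an interval estimate of the community error rate. The Wilson score interval is chosen here for illustration (the protocol does not specify a method), and all function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def draw_audit_sample(record_ids: Sequence[str], k: int, seed: int = 0) -> List[str]:
    """Simple random sample of Research Grade records for blind expert re-checking."""
    return random.Random(seed).sample(list(record_ids), k)


def wilson_interval(errors: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% Wilson score interval for the community error rate."""
    if n == 0:
        raise ValueError("empty audit sample")
    p = errors / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def consensus_confidence(votes_for_agreed_taxon: int, total_identifiers: int) -> float:
    """Agreement level stored with each validated record (e.g., 0.7 or 1.0)."""
    return votes_for_agreed_taxon / total_identifiers
```

Even an audit that finds no errors in 100 records leaves an upper bound of roughly 3.7% on the error rate, which is a useful guide when choosing the audit sample size.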

Advanced and Emerging Protocols

As data volumes grow, purely manual community consensus can become inefficient. Emerging solutions integrate automation to create hierarchical or semi-automated systems.

Protocol: Semi-Automated Conformal Taxonomic Validation

This protocol is adapted from recent research on using machine learning to support the validation of taxonomic records, representing a cutting-edge fusion of Tiers 1 and 2 [5].

1. Objective: To create a scalable, semi-automated validation pipeline that uses a deep-learning model to suggest identifications and conformal prediction to quantify the uncertainty of each suggestion, flagging only low-certainty records for community or expert review.

2. Experimental Workflow:

3. Materials and Reagents:

Table 3: Research Reagent Solutions for Semi-Automated Validation

| Item | Function/Description |
|---|---|
| Pre-Trained Deep Learning Model (e.g., CNN for image classification) | A model trained on a large, verified dataset of species images (e.g., from GBIF) capable of generating a probability distribution over possible species. |
| Conformal Prediction Framework | A statistical software package (e.g., in Python) that uses a "calibration set" of known data to output prediction sets (sets of plausible labels for a new data point) with a guaranteed confidence level (e.g., 90%) [5]. |
| Calibration Dataset | A curated hold-out set of data with known, correct identifications, used to calibrate the conformal predictor and ensure its confidence measures are accurate. |
| Uncertainty Threshold Configurator | A project-defined setting that determines what constitutes a "certain" prediction (e.g., a prediction set containing only one species) versus an "uncertain" one (a prediction set with multiple species). |

4. Step-by-Step Procedure:
   1. Model Training and Calibration: A deep-learning model is trained on a large corpus of validated species images. A separate, held-out calibration dataset is used to configure the conformal prediction framework [5].
   2. Record Processing: A new, unvalidated species image is submitted to the platform.
   3. Model Prediction and Uncertainty Quantification: The image is processed by the deep-learning model. Instead of taking only the top prediction, the conformal prediction framework generates a prediction set: a list of all species the model considers plausible for the image at a pre-defined confidence level (e.g., 90%) [5].
   4. Automated Decision Gate:
      - If the prediction set contains only a single species, the record is automatically validated and marked with a high-confidence flag. This path may handle the large majority of records of common species.
      - If the prediction set is empty or contains multiple species, the model is uncertain. The record is automatically flagged and routed to the community consensus queue (Tier 2) for human review.
   5. Community Refinement: The community of users works on the flagged records, using the model's prediction set as a starting point for their discussion and identification.
   6. Feedback Loop: Records resolved by the community can be fed back into the model's training data to iteratively improve its performance and reduce the number of records requiring manual review over time.
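Steps 1 to 4 correspond to split conformal prediction. The sketch below assumes the model already outputs a probability for each candidate species and uses 1 minus the probability of the true species as the nonconformity score, a common choice that may differ from the score used in [5]. The coverage guarantee (the true species lies in the set for at least 1 − α of records on average) holds only if calibration and new records are exchangeable. Function names and status strings are hypothetical.

```python
import math


def calibrate(cal_probs: list[list[float]], cal_labels: list[int], alpha: float = 0.10) -> float:
    """Split-conformal threshold from a held-out, expert-verified calibration set."""
    # Nonconformity score: 1 minus the probability given to the true species.
    scores = sorted(1.0 - p[y] for p, y in zip(cal_probs, cal_labels))
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # rank of the conformal quantile
    if k > n:
        return math.inf  # too few calibration records: every label is kept
    return scores[k - 1]


def prediction_set(probs: list[float], qhat: float) -> list[int]:
    """All species indices whose nonconformity score is within the threshold."""
    return [label for label, p in enumerate(probs) if 1.0 - p <= qhat]


def decision_gate(probs: list[float], qhat: float) -> tuple[str, list[int]]:
    """Auto-validate singleton sets; route empty or multi-species sets to Tier 2."""
    labels = prediction_set(probs, qhat)
    if len(labels) == 1:
        return "auto-validated (high confidence)", labels
    return "Tier 2 community queue", labels
```

The share of records that take the first branch depends on the model and on α: a smaller α yields larger prediction sets and routes more records to the community.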

This hierarchical approach, in which automation verifies the bulk of common records and only uncertain records undergo community consensus, concentrates human effort on the records that need it and so improves verification efficiency [4] [5].

Within a hierarchical verification system for citizen science data, Tier 3 represents the most advanced level of scrutiny, designed to resolve ambiguous cases and ensure the highest possible data quality. This tier leverages expert knowledge, advanced statistical methods, and rigorous protocols to adjudicate records that automated processes (Tier 1) and community consensus (Tier 2) have failed to verify with high confidence. The implementation of this tier is critical for research domains where data accuracy is paramount, such as in biodiversity monitoring for drug discovery from natural compounds or in tracking epidemiological patterns. This document outlines the application notes and detailed experimental protocols for establishing and operating a Tier-3 expert review system.

Quantitative Framework for Expert Review

A Tier-3 system relies on a quantitative foundation to identify candidate records for expert review and to calibrate the confidence of its decisions. The following metrics and statistical methods are central to this process.

Key Quantitative Metrics for Flagging Complex Cases

Records are escalated to Tier 3 based on specific, measurable criteria that indicate uncertainty or high stakes. The table below summarizes the primary quantitative triggers for expert review.

Table 1: Quantitative Triggers for Tier 3 Expert Review Escalation

| Trigger Category | Metric | Calculation / Threshold | Interpretation |
|---|---|---|---|
| Consensus Failure | Low Consensus Score | < 60% agreement among Tier 2 validators | Indicates high ambiguity that cannot be resolved by community input alone [5]. |
| Predictive Uncertainty | High Conformal P-values for Competing Candidates | P-value > 0.80 for more than one candidate species | The machine learning model is highly uncertain; multiple species are almost equally plausible [5]. |
| Data Rarity / Impact | Novelty Score | Record is > 3 standard deviations from the norm for a given region/season | Potential for a rare, invasive, or range-shifting species that requires expert confirmation [15]. |
| Conflict Indicator | High Expert Disagreement Index | > 30% disagreement rate among a panel of 3+ experts on a given record | Flags records that are inherently difficult and require a formalized arbitration process [15]. |
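The triggers in Table 1 can be combined into a single escalation check. In this sketch the thresholds are copied from the table, but the field and function names are hypothetical, the novelty score is assumed to be a simple z-score against historical records for the same region and season, and the predictive-uncertainty trigger is read as two or more candidate species with conformal p-values above 0.80. That reading matches the table's interpretation that several species are almost equally probable; a high p-value for the top candidate alone indicates a plausible label rather than uncertainty.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class RecordMetrics:
    consensus: float                  # share of Tier 2 validators agreeing (0 to 1)
    candidate_pvalues: list[float]    # conformal p-value for each candidate species
    novelty_z: float                  # z-score versus the regional/seasonal norm
    expert_disagreement: float = 0.0  # disagreement rate within the expert panel
    n_experts: int = 0                # size of that panel


def novelty_score(value: float, history: list[float]) -> float:
    """Assumed novelty score: standard deviations from historical records."""
    return (value - mean(history)) / stdev(history)


def tier3_triggers(m: RecordMetrics) -> list[str]:
    """Return the Table 1 trigger categories that fire for a record."""
    fired = []
    if m.consensus < 0.60:
        fired.append("Consensus Failure")
    if sum(p > 0.80 for p in m.candidate_pvalues) >= 2:
        fired.append("Predictive Uncertainty")
    if abs(m.novelty_z) > 3:
        fired.append("Data Rarity / Impact")
    if m.n_experts >= 3 and m.expert_disagreement > 0.30:
        fired.append("Conflict Indicator")
    return fired
```

Any non-empty result would send the record to Tier 3; keeping the list of fired triggers also documents why each record was escalated.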

Statistical Validation of Expert Performance

The reliability of the Tier 3 system itself must be quantitatively monitored. Conformal prediction offers a robust framework for providing valid confidence measures for each expert's classifications, so that the reliability of the quality-assurance process can itself be measured [5].

Table 2: Performance Benchmarks for Tier 3 Expert Review System

| Performance Indicator | Target Benchmark | Measurement Frequency | Corrective Action if Target Not Met |
|---|---|---|---|
| Expert Agreement Rate (Cohen's Kappa) | κ > 0.85 | Quarterly | Provide additional training on taxonomic keys for problematic groups [5]. |
| Average Review Time per Complex Case | < 15 minutes | Monthly | Optimize decision support tools and user interface for expert portal. |
| Rate of Data Publication to GBIF | > 95% of resolved cases within 48 hours | Weekly | Automate data export workflows and streamline API integrations [15]. |
| Predictive Calibration Error | < 5% difference between predicted and empirical confidence levels | Biannually | Recalibrate the underlying conformal prediction model with new expert-validated data [5]. |
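Two of the Table 2 benchmarks can be computed directly. Cohen's kappa compares the observed agreement between two experts with the agreement expected by chance, κ = (p_o − p_e)/(1 − p_e); for panels of three or more experts, Fleiss' kappa is the usual generalization. The calibration error is taken here, as an assumption, to be the absolute gap between the nominal coverage level and the fraction of expert-validated records whose true species fell inside the prediction set. Function names are illustrative.

```python
from __future__ import annotations

from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two experts who labelled the same records."""
    n = len(rater_a)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: both experts independently pick the same label.
    p_expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when both experts use one identical label")
    return (p_observed - p_expected) / (1 - p_expected)


def calibration_gap(truth_in_set: list[bool], nominal: float) -> float:
    """Absolute gap between empirical and nominal coverage of prediction sets."""
    empirical = sum(truth_in_set) / len(truth_in_set)
    return abs(empirical - nominal)
```

For a quarterly or biannual check, compare the kappa against 0.85 and the coverage gap against 0.05; a 90% predictor whose sets contained the true species for only 85 of 100 audited records would sit exactly at the 5% limit and fail the "< 5%" target.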

Experimental Protocols

Protocol 1: Conformal Prediction for Expert Calibration and Uncertainty Quantification

This protocol details the use of conformal prediction to generate predictive sets with guaranteed coverage for species identification, providing experts with a calibrated measure of machine-generated uncertainty.

1. Purpose: To quantify the uncertainty of automated species identifications from Tier 1 and present this information to Tier 3 experts in a statistically valid way, thereby focusing expert attention on the most plausible candidate species.

2. Methodology:

- Input: An image and/or metadata for an observation flagged for expert review.

- Pre-processing: The input data is transformed into a feature vector using a pre-trained deep neural network (e.g., ResNet-50) [5].

- Model & Calibration: A pre-calibrated conformal predictor is used. This predictor is trained and calibrated on a large, historical dataset of expert-verified citizen science records (e.g., from iNaturalist or GBIF) [5].

- Prediction Set Generation: For a new observation, the conformal predictor outputs a set of possible species classifications (not a single guess) at a pre-defined confidence level (e.g., 90%). Provided the calibration records and new records are exchangeable, such sets contain the true species for at least 90% of observations on average; this is a marginal guarantee, not a per-record probability. A large set indicates high model uncertainty.

- Output: The prediction set, along with the p-values for each candidate species, is presented to the expert reviewer. A small, high-confidence set allows the expert to quickly confirm. A large set clearly signals the need for careful discrimination between several options.
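The per-candidate p-values shown to the expert can be computed from the calibration scores alone. This sketch uses the split-conformal p-value p(y) = (1 + #{i : s_i ≥ s(y)})/(n + 1), with nonconformity score s(y) = 1 − (model probability of y); the prediction set at level 1 − α is every candidate with p(y) > α. It also reports credibility (the largest p-value) and confidence (1 minus the second-largest p-value), two summaries used in the conformal prediction literature. The score function and names are assumptions, not necessarily those of [5].

```python
from __future__ import annotations


def conformal_pvalues(probs: dict[str, float], cal_scores: list[float]) -> dict[str, float]:
    """Split-conformal p-value for every candidate species.

    cal_scores: nonconformity scores (1 - p(true species)) of expert-verified records.
    """
    n = len(cal_scores)
    pvalues = {}
    for species, p in probs.items():
        score = 1.0 - p  # nonconformity of labelling this record as `species`
        as_strange = sum(s >= score for s in cal_scores)
        pvalues[species] = (as_strange + 1) / (n + 1)
    return pvalues


def expert_view(probs: dict[str, float], cal_scores: list[float], alpha: float = 0.10) -> dict:
    """Summary for the Tier 3 reviewer: prediction set, ranked p-values, credibility, confidence."""
    pvalues = conformal_pvalues(probs, cal_scores)
    ranked = sorted(pvalues.items(), key=lambda kv: kv[1], reverse=True)
    return {
        "prediction_set": [sp for sp, pv in ranked if pv > alpha],
        "pvalues": dict(ranked),
        "credibility": ranked[0][1],
        "confidence": 1.0 - ranked[1][1] if len(ranked) > 1 else 1.0,
    }
```

For example, with model probabilities of 0.75, 0.20 and 0.05 and the nine calibration scores 0.05, 0.1, 0.2, 0.3, 0.5, 0.6, 0.7, 0.85 and 0.9, the p-values are 0.7, 0.3 and 0.1, so the 90% set holds the first two candidates and the expert only needs to discriminate between them.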

Protocol 2: Blinded Expert Adjudication for Conflict Resolution

This protocol establishes a formal process for resolving cases where initial expert reviews are in conflict, ensuring an unbiased and definitive outcome.

1. Purpose: To resolve discrepancies in species identification from multiple Tier 3 experts, thereby producing a single, authoritative validation decision for high-stakes records.

2. Methodology:

- Case Identification: A record is entered into this protocol when the Expert Disagreement Index (see Table 1) exceeds a predefined threshold.

- Expert Panel Selection: A panel of at least two additional experts, who were not involved in the initial review, is assembled. These experts are blinded to the identities and rationales of the initial reviewers.

- Independent Review: Each panelist independently reviews the record using the standard Tier 3 decision support tools.

- Arbitration Meeting: If the panelists agree with each other, their consensus decision is final. If the panelists disagree, a moderated arbitration meeting is held where each expert presents evidence for their classification. The goal is to reach a consensus.

- Final Decision: If consensus is reached, the record is validated accordingly. If not, the record is marked as "Unresolvable" and may be flagged for future research or collection of additional evidence. The entire process is documented for system improvement.
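The adjudication rules above can be expressed as a small decision function. The sketch is hypothetical: the disagreement index is assumed to be the share of experts who differ from the most common label, blinding is assumed to be enforced by the review interface rather than by this code, and the moderated arbitration meeting is modelled as a callback that returns the agreed species or None.

```python
from __future__ import annotations

from collections import Counter
from typing import Callable, Optional


def disagreement_index(labels: list[str]) -> float:
    """Assumed definition: share of experts who differ from the most common label."""
    top_votes = Counter(labels).most_common(1)[0][1]
    return 1 - top_votes / len(labels)


def adjudicate(initial_reviewers: set[str], panel: dict[str, str],
               arbitrate: Callable[[dict[str, str]], Optional[str]]) -> str:
    """Blinded adjudication of one record.

    panel maps each new expert to the species they identified independently.
    arbitrate stands in for the moderated meeting and returns a species or None.
    """
    if len(panel) < 2:
        raise ValueError("at least two additional experts are required")
    if initial_reviewers.intersection(panel):
        raise ValueError("panelists must not have taken part in the initial review")
    decisions = set(panel.values())
    if len(decisions) == 1:
        return decisions.pop()  # panelists agree: their decision is final
    agreed = arbitrate(panel)   # panelists disagree: moderated arbitration
    return agreed if agreed is not None else "Unresolvable"
```

With three initial experts split 2 to 1, the index is about 0.33, which exceeds the 30% threshold in Table 1 and would send the record into this protocol.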

Visualization of Tier 3 Workflow

The following diagram illustrates the logical flow and decision points within the Tier 3 expert review system.

The Scientist's Toolkit: Research Reagent Solutions

This section details the essential computational and data resources required to implement and operate a Tier 3 expert review system.

Table 3: Essential Research Reagents for a Tier 3 Expert Review System

| Tool / Resource | Type | Function in Tier 3 Process | Example / Note |
|---|---|---|---|
| Conformal Prediction Framework | Software Library | Provides statistically valid confidence measures for machine learning classifications, quantifying uncertainty for experts [5]. | Custom Python code as described in [5]; can be built upon libraries like nonconformist. |
| Global Biodiversity Information Facility (GBIF) | Data Infrastructure | Provides the reference dataset for calibrating models and serves as the ultimate repository for validated records [15]. | Use DOIs: 10.15468/dl.5arth9, 10.15468/dl.mp5338 for specific record collections [5]. |
| Quantum Annealing-based Graph Coloring | Advanced Algorithm | Can be used to optimize expert workload assignment, ensuring no expert is assigned conflicting cases or is over-burdened [25]. | Implementation as documented in the graph_coloring algorithm; can be used for task scheduling [25]. |
| High-Contrast Visualization Palette | Design Standard | Ensures accessibility and clarity in decision-support tools and dashboards used by experts, reducing cognitive load and error [26]. | Use shades of blue for primary data (nodes) and complementary colors (e.g., orange) for highlighting links/actions [26]. |
| Citizen Science Platform (CSP) API | Software Interface | Enables seamless data exchange between the Tier 3 review interface and the broader citizen science platform (e.g., for escalation and final publication) [15]. | iNaturalist API or custom-built APIs for proprietary platforms. |

Leveraging Hierarchical Classification for Taxonomic Validation

This application note presents a structured framework for implementing hierarchical classification to enhance taxonomic validation in citizen science. With data quality remaining a significant barrier to the scientific acceptance of citizen-generated observations, we detail a protocol that integrates deep-learning models with conformal prediction to provide reliable, scalable species identification. The methodologies and validation techniques described herein are designed to be integrated into a broader hierarchical verification system for citizen science data quality, ensuring robust datasets for ecological research and monitoring.

Citizen science enables ecological data collection over immense spatial and temporal scales, producing datasets of tremendous value for pure and applied research [4]. However, the accuracy of citizen science data is often questioned because of concerns about data quality and verification, the process of checking records for correctness, typically by confirming species identity [4]. In ecological contexts, taxonomic validation is the verification step that ensures species identifications are accurate.

As the volume of data collected through citizen science grows, traditional approaches like expert verification, while valuable, become increasingly impractical [4]. Hierarchical classification addresses this by mirroring biological taxonomies: identifications are made through a structured tree of decisions, from broad categories (e.g., family) to specific ones (e.g., species). This approach can improve both accuracy and computational efficiency. When combined with modern probabilistic deep-learning techniques, it provides a framework for scalable data validation suitable for integration into automated and semi-automated verification systems [5].
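A top-down hierarchical decision can be illustrated with flat species probabilities and a species-to-family mapping. This is a simplified sketch, not the method of [5] or [27]: family probabilities are obtained by summing species probabilities, the record is assigned to a family only if that sum clears a threshold, and a species name is returned only if it dominates within that family; otherwise the record keeps a family-level identification. The thresholds (0.9 and 0.8) and the function name are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


def hierarchical_identify(species_probs: dict[str, float], family_of: dict[str, str],
                          family_min: float = 0.9, species_min: float = 0.8) -> tuple[str, str]:
    """Top-down identification: commit to a family first, then to a species within it.

    Returns (rank, taxon) where rank is "species", "family" or "unresolved".
    """
    family_probs: dict[str, float] = defaultdict(float)
    for species, p in species_probs.items():
        family_probs[family_of[species]] += p
    family, family_p = max(family_probs.items(), key=lambda kv: kv[1])
    if family_p < family_min:
        return "unresolved", ""
    # Renormalise species probabilities within the chosen family.
    within = {s: p / family_p for s, p in species_probs.items() if family_of[s] == family}
    species, species_p = max(within.items(), key=lambda kv: kv[1])
    if species_p >= species_min:
        return "species", species
    return "family", family
```

With probabilities of 0.50 for Apis mellifera, 0.45 for Bombus terrestris and 0.05 for Vespa crabro, a flat classifier would commit to A. mellifera at 50%, whereas this sketch returns the family Apidae (combined probability 0.95), a coarser but far more reliable identification.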

Core Methodological Framework

Hierarchical Classification with Conformal Prediction

The proposed framework integrates hierarchical classification with conformal prediction to provide statistically calibrated confidence measures for taxonomic identifications [5].

- Deep Taxonomic Networks: Utilize deep latent variable models to automatically discover taxonomic structures and prototype clusters directly from unlabeled data. These networks optimize a complete binary tree-structured mixture-of-Gaussian prior within a variational inference framework, learning rich and interpretable hierarchical taxonomies that capture both coarse-grained semantic categories and fine-grained visual distinctions [27].